Community resources

Performance for Jira’s team-managed projects just got snappier!

Hi everyone,

I'm Ivan, a Product Manager in Jira Software Cloud. We take customer feedback seriously at Atlassian, and we want you to know that we’ve heard you loud and clear! Performance improvement is always top of mind for us, and our teams have been working hard to identify new methods that will make Jira Software faster.

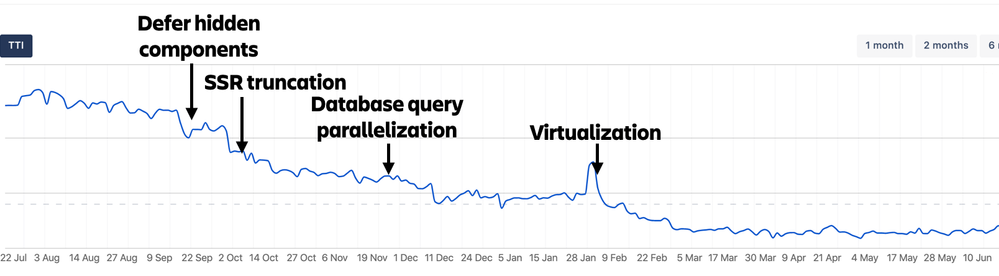

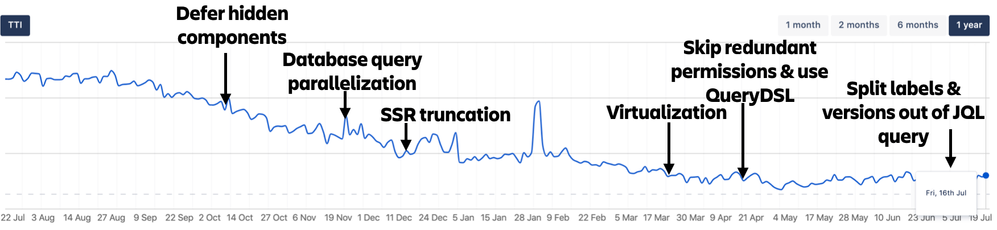

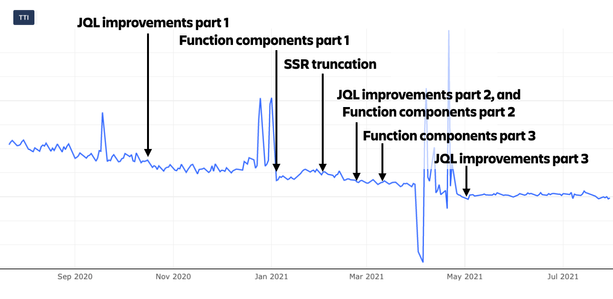

We are excited to share that we’ve made significant performance improvements in team-managed projects' Time to Interactive (TTI) measurement for page loads and interactions between June 2020 and July 2021.

TL;DR: here’s a summary of how performance has improved over the last year. The larger your team-managed backlogs, boards and roadmaps, the more noticeable the improvements!

* TTI improvements for Team-Managed Projects' Backlogs:

| Experience | TTI Improvement |
|---|---|
| Opening a backlog | 51% faster |
| Seeing results from quick filters on the backlog | 50% faster |
| Opening the embedded (side panel or modal) issue view in the backlog | 34% faster |

* TTI improvements for Team-Managed Projects' Boards:

| Experience | TTI Improvement |
|---|---|
| Opening a board | 35% faster |
| Seeing results from quick filters on the board | 50% faster |
| Opening the embedded (side panel or modal) issue view in the board | 30% faster |
| Creating issues inline on the board | 50% faster |

* TTI improvements for Team-Managed Projects' Roadmaps:

| Experience | TTI Improvement |
|---|---|
| Opening a roadmap | 44% faster |

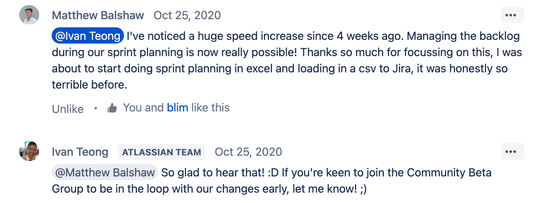

We worked hard to improve performance, and we’re glad that you are already noticing the fruits of our labour!

We know our performance journey doesn’t end here. Improving the customer experience remains a top priority, and we wanted to bring all of you along for the ride. Read on for a deep dive into the specifics and to learn how we achieved performance gains in a variety of areas.

Spoiler: It gets really technical from here!

Some context on our technology stack:

- React.js, with a Redux store

- Frontend components from Atlassian’s AtlasKit

- A GraphQL API backend

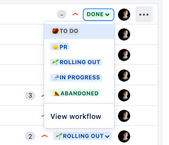

Faster to load your team-managed projects' backlogs, boards and roadmaps

* Graphs show relative improvements and trends over time. The origin of the y-axis is not zero.

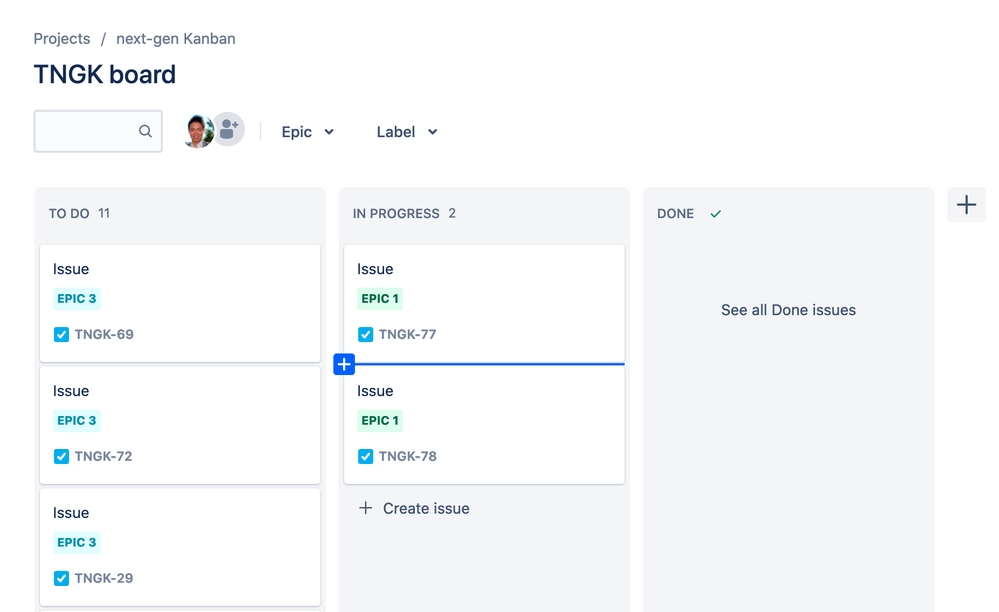

Team-managed backlog TTI performance:

Team-managed board TTI performance:

Team-managed roadmap TTI performance:

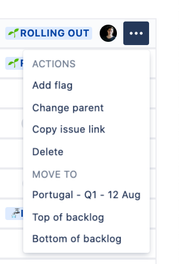

#1: Deferring the rendering of hidden components for backlog and board

- Problem: We were rendering every element on every issue, even those that were hidden during page load. This caused page load time to increase linearly as the number of issues grew.

- Solution: We deferred rendering hidden components until the user hovers over an issue, which signals an intent to interact with it. There is less code to download for page load, and the browser has less code to parse and execute on the UI thread (or rather, the TTI thread).

Rendered during mouse hover:

| Backlog | Board |
|---|---|
| Card Meatball Menu | Card Meatball Menu |
| Inline Edit Status, Assignee, Story Points | Card Context Menu |
| Inline Issue Create | Inline Issue Create |
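As a rough illustration of the deferral idea (not the actual Jira code), the sketch below models a card whose hidden controls are only built the first time the pointer hovers over it. The names `IssueCard`, `onHover` and `buildHiddenControls` are hypothetical, and the framework-free class is only a stand-in for a React component.

```typescript
// Hypothetical names throughout; a framework-free stand-in for a React card.
type Control = "meatball-menu" | "inline-edit" | "inline-create";

// Counts how often the expensive controls are built, for illustration only.
let buildCount = 0;

function buildHiddenControls(): Control[] {
  buildCount += 1;
  return ["meatball-menu", "inline-edit", "inline-create"];
}

class IssueCard {
  summary: string;
  private hiddenControls: Control[] | null = null;

  constructor(summary: string) {
    this.summary = summary;
  }

  // Called when the pointer enters the card, signalling intent to interact.
  onHover(): void {
    if (this.hiddenControls === null) {
      this.hiddenControls = buildHiddenControls(); // built once, on demand
    }
  }

  // Until the first hover, only the always-visible content is rendered.
  render(): string[] {
    return [this.summary, ...(this.hiddenControls ?? [])];
  }
}

// Page load: 200 cards render without building any hidden controls.
const cards = Array.from({ length: 200 }, (_, i) => new IssueCard(`Issue ${i + 1}`));
const initial = cards.map((card) => card.render());

// Only the hovered card pays the cost, and only once.
cards[0].onHover();
cards[0].onHover();
```

In this model the cost of the hidden controls scales with the number of cards a user actually hovers over rather than with the number of issues on the page.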

#2: Truncating the number of issues for Jira’s Server-Side Rendering (SSR) for backlog, board and roadmap

- Problem: We were rendering all the issues in the backlog, board or roadmap through Server-Side Rendering on Jira’s servers for First Meaningful Paint (FMP). For larger backlogs, boards and roadmaps, the render could not finish within the 3-second timeout threshold, so SSR timed out. Each timeout wasted 3 seconds of overall page load, because the skeleton was flushed and everything restarted on the client side, resulting in a second data fetch.

- Solution: We truncated the number of issues rendered through SSR for the First Meaningful Paint to 50, which prevents the SSR timeouts that added 3 seconds. The user’s browser renders the issues beyond the initial 50. This keeps the SSR render time constant regardless of the number of issues.
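The truncation can be pictured as a simple split of the issue list. This is a minimal sketch under assumptions: the `Issue` shape, `splitForSsr` and `SSR_ISSUE_LIMIT` are hypothetical names, and only the limit of 50 comes from the description above.

```typescript
// Hypothetical types and names; only the 50-issue limit comes from the post.
interface Issue {
  id: number;
  summary: string;
}

const SSR_ISSUE_LIMIT = 50;

// Split the issue list into the part rendered on the server and the rest,
// which the browser renders on the client side.
function splitForSsr(issues: Issue[], limit: number = SSR_ISSUE_LIMIT) {
  return {
    serverRendered: issues.slice(0, limit),
    clientRendered: issues.slice(limit),
  };
}

const backlog: Issue[] = Array.from({ length: 1200 }, (_, i) => ({
  id: i + 1,
  summary: `Issue ${i + 1}`,
}));

const { serverRendered, clientRendered } = splitForSsr(backlog);
console.log(serverRendered.length, clientRendered.length); // 50 1150
```

Because the server-rendered slice never exceeds the limit, the server's work stays bounded however large the backlog grows.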

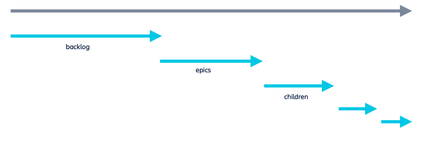

#3: Parallelization of database queries for fetching issues' data for backlog and board

- Problem: When fetching the data for the lists of issues for epics, sprints, subtasks etc., the database queries ran sequentially. Each query blocked the next, so their durations added up to a longer data fetch and therefore a longer TTI (Time to Interactive).

- Solution: We parallelized the backend database queries for issue data on the backlog and board views, so that they no longer block each other.
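The difference between the two strategies can be sketched as follows. This is an illustration of the pattern only: the post does not show the backend code, so `runQuery`, `fetchSequentially` and `fetchConcurrently` are hypothetical names, TypeScript is used purely for readability, and the "query" is a stand-in that yields once instead of touching a database.

```typescript
type QueryName = "epics" | "sprints" | "subtasks";

// Hypothetical stand-in for a database query; it yields once to mimic I/O.
async function runQuery(name: QueryName, log: string[]): Promise<string> {
  log.push(`start ${name}`);
  await Promise.resolve(); // simulated wait on the database
  log.push(`end ${name}`);
  return `${name}-rows`;
}

// Previous strategy: each query waits for the one before it to finish.
async function fetchSequentially(names: QueryName[], log: string[]): Promise<string[]> {
  const results: string[] = [];
  for (const name of names) {
    results.push(await runQuery(name, log));
  }
  return results;
}

// Concurrent strategy: start every query first, then wait for all of them.
async function fetchConcurrently(names: QueryName[], log: string[]): Promise<string[]> {
  return Promise.all(names.map((name) => runQuery(name, log)));
}

const demoLog: string[] = [];
fetchConcurrently(["epics", "sprints", "subtasks"], demoLog).then((rows) => {
  // All three "start" entries are logged before any "end" entry.
  console.log(rows, demoLog);
});
```

With independent queries, the sequential version takes roughly the sum of the query durations, while the concurrent version takes roughly as long as the slowest query.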

Previous fetching strategy compared with the concurrent fetching strategy (our change):

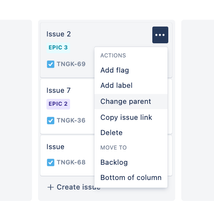

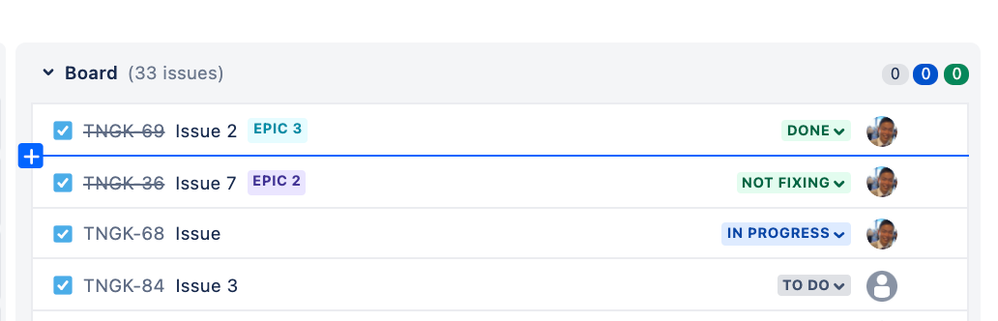

#4: Virtualization of backlog and board

- Problem: We were rendering all issues upfront, even when they were not within the viewport of the user’s browser. This caused render time to increase linearly for larger backlogs and boards, and it also slowed down filtering and drag-and-drop TTI.

- Solution: We used a React virtualization library that allowed us to render only the issues that are within the viewport of the browser as a user scrolls vertically, plus a buffer of 10 issues outside the viewport.
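The core calculation behind this kind of windowing can be sketched as below. It is not the library's API: `visibleRange` is a hypothetical helper, it assumes every row has the same fixed height, and it assumes the buffer of 10 applies on each side of the viewport.

```typescript
// Hypothetical helper; only the 10-issue buffer comes from the post.
// Assumes fixed-height rows, which real backlogs and boards may not have.
interface VisibleRange {
  start: number; // index of the first row to render (inclusive)
  end: number; // index one past the last row to render (exclusive)
}

function visibleRange(
  scrollTop: number,
  viewportHeight: number,
  rowHeight: number,
  totalRows: number,
  buffer: number = 10,
): VisibleRange {
  const firstVisible = Math.floor(scrollTop / rowHeight);
  const lastVisible = Math.ceil((scrollTop + viewportHeight) / rowHeight);
  return {
    start: Math.max(0, firstVisible - buffer),
    end: Math.min(totalRows, lastVisible + buffer),
  };
}

// 1,000 issues, 40px rows, an 800px viewport, scrolled down by 4,000px.
const range = visibleRange(4000, 800, 40, 1000);
console.log(range.start, range.end, range.end - range.start); // 90 130 40
```

In this example only 40 of the 1,000 rows are rendered at any time, so render cost depends on the viewport size rather than on the length of the list.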

Figures: Backlog Virtualization, Board Virtualization.

Other changes with larger impact on large backlogs, boards and roadmaps:

- Backlog, board and roadmap:
  - Loading frontend bundles async
  - Jira Query Language (JQL) backend optimizations

- Backlog and board:
  - Skip redundant permission backend checks
  - Use QueryDSL instead of ActiveObjects framework

- Roadmap only:
  - Switch from React class to function components
  - Eliminate extra React higher-order components

Faster to filter your issues on the backlog and board

Filtering TTI for team-managed backlogs and boards also improved by 50% due to virtualization.

- Filters work by filtering issues that are in memory.

- Pages still need to be re-rendered when the filters change, but thanks to virtualization there are now fewer issues to re-render each time.
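To make the interaction between filtering and virtualization concrete, here is a small sketch with made-up data. The `Issue` shape, `applyFilter`, `rowsToRender` and the window size of 30 are all assumptions for illustration, not details from the post.

```typescript
// Hypothetical data shape and names, for illustration only.
interface Issue {
  key: string;
  assignee: string;
}

// Filtering runs over the full in-memory list...
function applyFilter(issues: Issue[], assignee: string): Issue[] {
  return issues.filter((issue) => issue.assignee === assignee);
}

// ...but only the rows inside the virtualized window reach the renderer.
function rowsToRender(filtered: Issue[], windowSize: number): Issue[] {
  return filtered.slice(0, windowSize);
}

const issues: Issue[] = Array.from({ length: 900 }, (_, i) => ({
  key: `PROJ-${i + 1}`,
  assignee: i % 3 === 0 ? "alex" : "sam",
}));

const matches = applyFilter(issues, "alex");
const rendered = rowsToRender(matches, 30);
console.log(matches.length, rendered.length); // 300 30
```

The filter still matches 300 issues, but a filter change only triggers a re-render of the 30 rows in the window.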

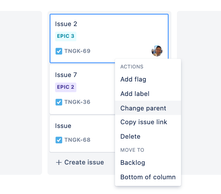

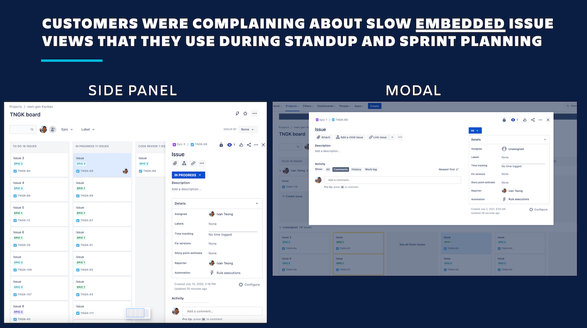

Faster to view your embedded issue views on the board

We also heard your feedback about embedded (side panel or modal) issue views that you have to open during team activities like daily stand-ups and sprint planning.

There was latency when the backlog and board components tried to mount the embedded issue view, so we targeted that mount time.

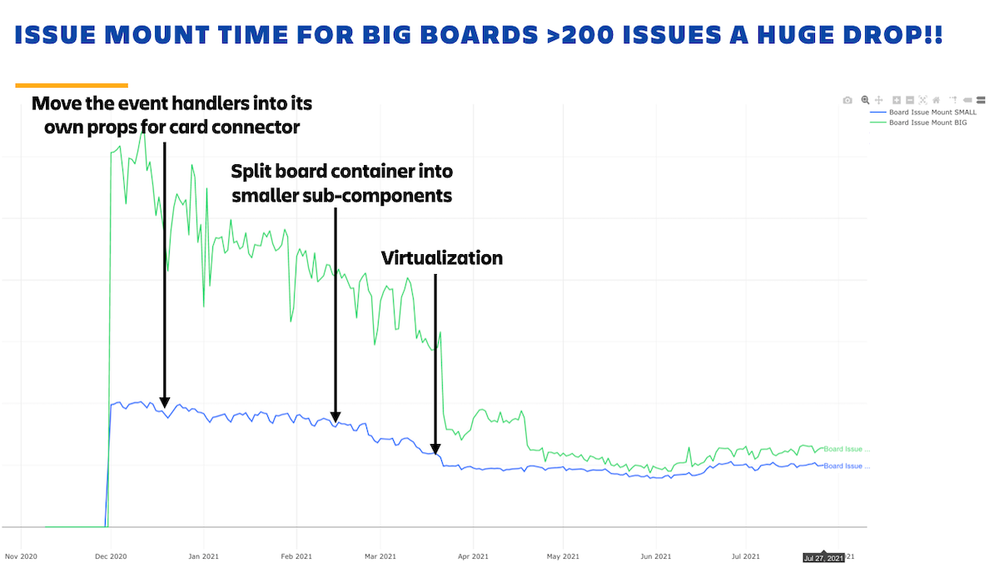

- Backlog’s issue mount time improved by 47%, which improved the overall TTI of opening the backlog’s embedded issue view by 34%.

- Board’s issue mount time improved by 54%, which improved the overall TTI of opening the board’s embedded issue view by 30%.

The improvement is even more pronounced for larger backlogs and boards.

- Issue mount time for bigger boards with > 200 issues dropped by ~80%!

- We refactored the codebase to eliminate the re-rendering of the entire board whenever an interaction happens, which has downstream effects on mount time.

- Virtualization also helped.

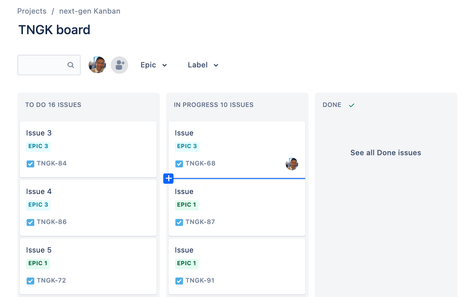

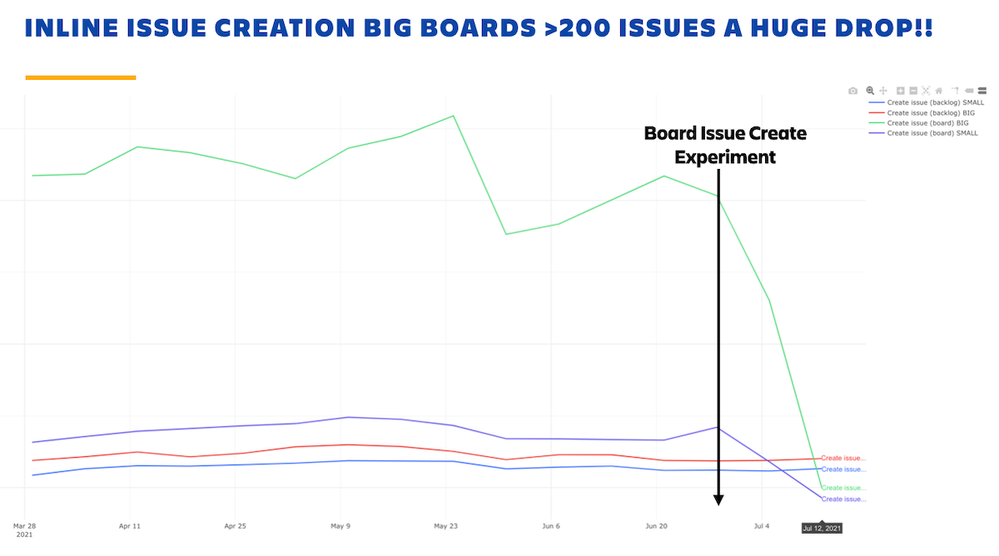

Faster to create issues inline on the board

We also improved the Inline Issue Create TTI on the board by ~50%!

The improvement is even more pronounced for bigger boards (more than 200 issues), where Inline Issue Create TTI dropped by 80% and is now almost on par with small boards (fewer than 200 issues).

- First, we eliminated redundant API calls on the software gateway backend.

- Next, instead of updating the entire board (fetching all issues' data on the backend) when creating an issue inline, we only update the issue that was created (fetching only the new issue ID on the backend).
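The second change can be sketched as the difference between rebuilding the board state and patching it. This is a simplified model under assumptions: `BoardState`, `refreshWholeBoard` and `applyCreatedIssue` are hypothetical names, and the real store and API are not shown in the post.

```typescript
// Hypothetical board model and names; not Jira's actual store or API.
interface Issue {
  id: number;
  summary: string;
  column: string;
}

type BoardState = Map<number, Issue>;

// Previous approach (as described): rebuild the board from a full fetch.
function refreshWholeBoard(fetchAll: () => Issue[]): BoardState {
  return new Map(fetchAll().map((issue) => [issue.id, issue] as [number, Issue]));
}

// New approach: keep the existing entries and add only the created issue.
function applyCreatedIssue(board: BoardState, created: Issue): BoardState {
  const next = new Map(board);
  next.set(created.id, created);
  return next;
}

const existing: Issue[] = [
  { id: 1, summary: "Set up CI", column: "To Do" },
  { id: 2, summary: "Write docs", column: "In Progress" },
  { id: 3, summary: "Fix login bug", column: "Done" },
];

const board = refreshWholeBoard(() => existing); // initial load only
const updated = applyCreatedIssue(board, { id: 4, summary: "New idea", column: "To Do" });
console.log(board.size, updated.size); // 3 4
```

Existing issue objects keep their identity in the patched state, so in a React and Redux setup, components for unchanged issues can typically skip re-rendering.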

Conclusion

If you’re keen to hear more about other performance improvements from the wider Jira teams tackling platform experiences that impact multiple Jira products, please take a look at our blog on 7 recent performance improvements in Jira!

We’ll be continuing our focus on performance and scale over the next year so stay tuned.

Have you noticed our performance improvements? We’d like to know!

Ivan Teong

About this author

Product Manager, Jira Software

Atlassian

Sydney, Australia
