Community resources

"Don't fuck the customer": what we're changing after the incidents from last week

Hi all,

Two of Atlassian's values are "don't fuck the customer" and "open company no bullshit". In the spirit of these two values, here's a follow-up to last week's post about the incidents we had. I thought some of you might be interested in how we deal with things like this. As is often the case in these situations, we needed to dig a little to figure out whether it was just bad luck or whether there was something more systemic to fix.

Firstly, what happened? Over the course of a week you saw the following issues:

- Three outages in 2 days, where you couldn't access discovery projects at all

- An issue where you couldn't edit issue descriptions

- An issue where you couldn't edit select/multi-select fields in list views

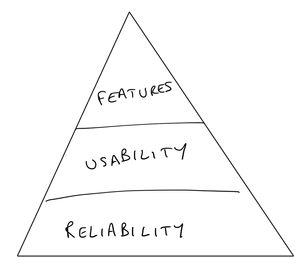

Needless to say, by the end of the week team morale wasn't great. We met to discuss what we could learn from these incidents and what we needed to change. First things first, we realigned on the principles we use to prioritize new work: #1 reliability, #2 usability, #3 features (RUF).

In the early stages of building a new product, there's always a tension between focusing on reliability and shipping new features: we want to move fast to find product-market fit, but if we move too fast, we risk introducing bugs and incidents.

Some of you may remember that we had a few incidents within a single week a few months back. Since then, 30% of each sprint's capacity has been allocated to tech debt: dealing with scale, bugs and improvements. That worked well for us up until last week, but this time around it wasn't enough.

What changed since then:

- We increased the surface area in terms of features and things that can go wrong, but we were still relying too heavily on dogfooding and manual testing to catch breakages in staging before they reached production

- We refactored a number of things in the backend to be able to better deal with scale as it became clear that the app was finding an audience! But doing this without enough automated test coverage was risky.

What didn't change since then:

- We were still using goal-based sprints and trying to hit specific goals each sprint. That served us well to get to market and iterate quickly, but it introduced risk because we sometimes took shortcuts to get something into users' hands quickly

- Our team has 10 engineers, and we had 1h of meetings a day (2h on Wednesdays for demo and planning). A good chunk of the daily 1h meeting went to standup, leaving too little time for sparring and discussion. As a result, too many decisions were implemented quickly by individual team members instead of being debated by the team.

It became clear that we had reached a phase where this wasn't going to work anymore. So we're changing the following things:

- We're introducing a lot more automated tests. There's a hit list of key features that must always work, and we're adding tests for those now. Over time, we'll add a test for every bug we find.

- Our work split moves to 1/3 reliability, techops and tech debt; 1/3 improvements to the existing experience; and 1/3 new features.

- We're changing team rituals:

  - Standup is moving to Slack. There's no need to spend 20-30min discussing status when we can just do it async.

  - We'll use the time we get back in the daily 1h meeting for team sparring, and bring key decisions to the team instead. That's where we'll discuss key risks and how to address them.

  - We're moving away from goal-based sprints toward more of a Kanban model for planning. We'll put a little less emphasis on shipping fast so we can be more intentional about the changes we make, and see how that works.

The things we're not changing at this stage:

- Flat hierarchy, with everyone in every key meeting: at this stage, we believe a shared understanding of everything from user problems to solutions is key

- Few meetings - everyone needs time to get shit done

- The roadmap is loosely defined and changes frequently based on user feedback

We'll do a retro in about a month to see how that worked, and will keep you posted!

Tanguy Crusson
