Building a Comprehensive Data Model for my Organization

Building a Scalable Jira Cloud Architecture: Why We Did It, What We Built, and What Comes Next

Why This Matters

Before this effort, we were dealing with:

- Inconsistent reporting and terminology

- Limited traceability from request to delivery

- Fragmented workflows and project structures

- Tooling friction that slows down onboarding and execution

- High admin overhead due to fragmented and poorly documented configurations

A unified architecture is meant to enable:

- Cross-team collaboration with consistent workflows and terminology

- Strategic reporting through standardized fields and metrics

- Scalable growth by reducing Jira setup inconsistencies

- Governance and change management that’s transparent and inclusive

What We Built

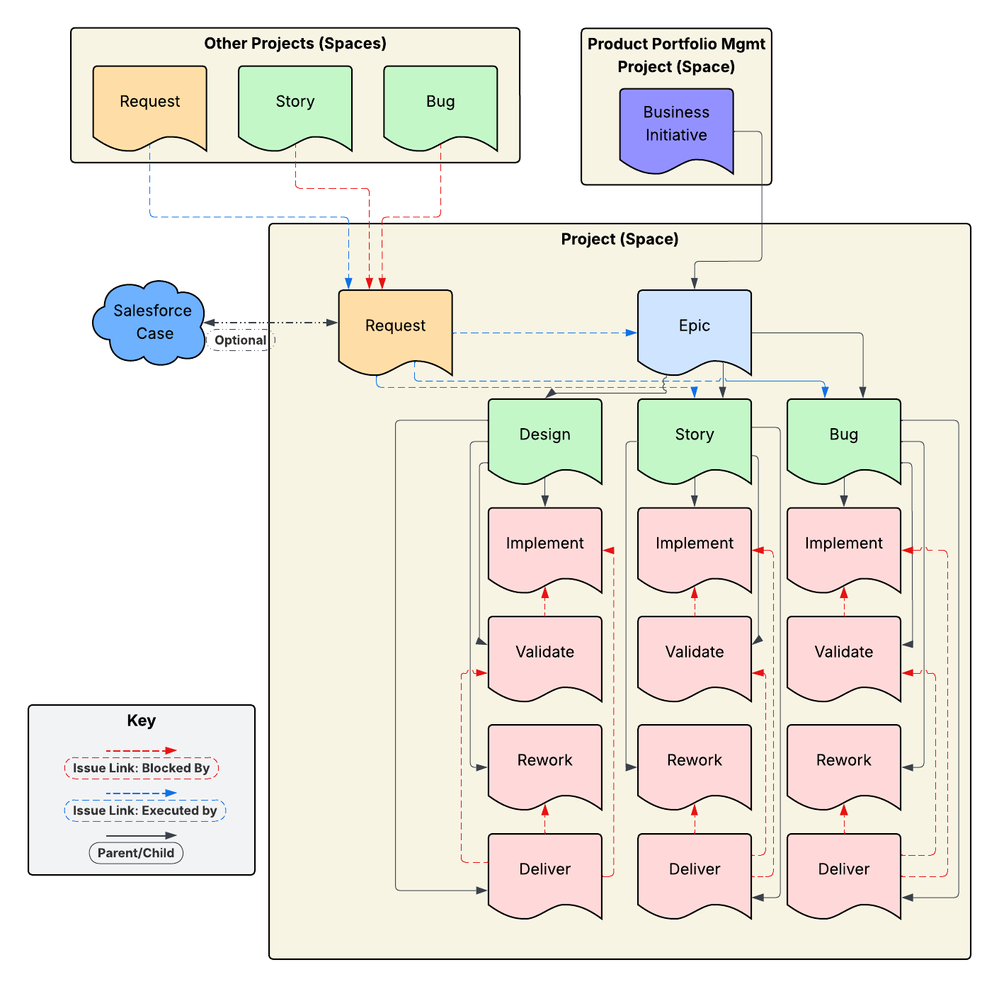

Relationship Overview

Project Types

- Product Development Projects: Deliver products for internal/external customers (long-term project: it remains active as long as the product is delivered or supported)

- Service Management Projects: Deliver operational services using existing systems (long-term project: it remains active as long as the service is offered)

- Cross-Cutter Projects: Deliver strategic initiatives or compliance outcomes (short-term project: its lifespan is set by its scope, and it is archived once that scope is delivered)

- Exploratory Projects: Support PoCs, research, and tech evaluations (short-term project: its lifespan is a fixed time box, after which it is either converted to a Product Development project or archived)

This means that, going forward, every Project (Space) maps consistently to a Product or Service, so there is no longer any question about which project an issue should be reported in. We use the Team field to assign issues to teams across all Projects, so any issue can be assigned to any team regardless of which product it belongs to. Team boards are built on a base "Team" = "Team Name" filter so they can ingest issues from any project.
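As a rough illustration of why this works, the Python sketch below models a team board as a filter on the Team field alone. The `WorkItem` class, the `team_board` function, and the project and team names are hypothetical; in Jira the same selection is done by the board's saved filter, not by code.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class WorkItem:
    """Hypothetical, simplified stand-in for a Jira work item."""
    key: str
    project: str
    team: Optional[str]  # value of the Team field; None if unassigned


def team_board(items: List[WorkItem], team_name: str) -> List[WorkItem]:
    """Mimic a board whose base filter is "Team" = team_name.

    The project is deliberately not part of the filter, so the board
    picks up matching items from Product, Service, Cross-Cutter, and
    Exploratory projects alike.
    """
    return [item for item in items if item.team == team_name]


items = [
    WorkItem("PAY-101", "Payments", "Team Falcon"),
    WorkItem("OPS-17", "Hosting Service", "Team Falcon"),
    WorkItem("PAY-102", "Payments", "Team Heron"),
    WorkItem("POC-3", "Search PoC", None),
]
print([item.key for item in team_board(items, "Team Falcon")])  # ['PAY-101', 'OPS-17']
```

Because the project never appears in the predicate, moving a team onto a different product changes nothing about its board; only the Team field matters.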

Request vs Delivery Model

Issue Types & Statuses

- Business Initiative: Tracks strategic-level objectives and is the parent of all Epics. (I haven't defined these workflows yet, as I'm still working with strategic leadership to understand their requirements.)

- Request: Identifies work requests for products and services. Linked to Delivery type work items through an "Executed by" link.

- Epic: Container for a scope of work executed by a single team. Limiting each Epic to one team keeps estimation roll-ups meaningful (they reflect a single team's velocity and estimation practices) and sets clear boundaries of responsibility for the executing teams. Epics are assigned to teams via the Team field.

- Story: Unit of value delivered by a team. Must have a Definition of Ready, Definition of Done, and Acceptance Criteria to be considered "Ready". Must be linked to a parent Epic, and inherits the Team field from that parent. It differs from a "Task" in that it is defined by the value delivered to the customer rather than by the activity being completed.

- Design: Tracks the planning work an Epic needs before the executing team can consider it "Ready". Design items do not move their Epic to "In Progress", but they do block it from becoming "Ready".

- Bug: Tracks reports that a product does not meet its specification. We use the Affected Version field to determine whether a bug impacts only unreleased product or also released products. We add a "NotShipped" version as a marker indicating that the earliest affected version has been identified (as opposed to not yet checked). For example, "Affected Version": "Product1.2.2", "NotShipped" means the bug affects version 1.2.2 and NOT version 1.2.1 or earlier.

- Implement (subtask): Subtasks for any product are derived from its Definition of Done. Implement work can include architecture definition and documentation, writing code, executing a configuration change, or updating a database.

- Validate (subtask): Our best practices dictate that every executed Story should be validated by a different team member before it is marked complete. Generally, the team's test lead writes test case definitions, and this subtask tracks executing those test cases against the completed work.

- Rework (subtask): When validation finds that the acceptance criteria are not met, each gap is logged as a Rework subtask. A Rework item either blocks Validation (when the failure prevents the remaining test cases from being completed) or is verified independently (when the other test cases could still be completed). If a Product Owner decides the gap should not block completion of the Story, they can convert the item into a Bug to be resolved later.

- Deliver (subtask): Covers any final release requirements in the Definition of Done, such as merging to the Main branch, defaulting a feature toggle to "on", or creating Release Notes or Change Management documentation. Deliver subtasks are especially useful when a change must be deployed to multiple locations, because each deployment can be tracked independently.
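The "NotShipped" convention is easy to misread, so here is a minimal Python sketch of how a report or script might interpret it. It assumes a hypothetical `release_order` list of the product's versions from oldest to newest; the function names are illustrative and not part of any Jira API.

```python
from typing import List, Optional

NOT_SHIPPED = "NotShipped"  # marker version described above


def earliest_affected(affected: List[str], release_order: List[str]) -> Optional[str]:
    """Return the earliest affected version, or None if it is not yet known.

    `release_order` lists the product's real versions from oldest to newest
    (a hypothetical input; Jira keeps its own version ordering per project).
    """
    if NOT_SHIPPED not in affected:
        return None  # older versions have not been checked yet
    real = [v for v in affected if v != NOT_SHIPPED]
    if not real:
        return None  # marker set without a concrete version
    return min(real, key=release_order.index)


def known_unaffected(version: str, affected: List[str], release_order: List[str]) -> bool:
    """True only when `version` is confirmed to predate the bug."""
    earliest = earliest_affected(affected, release_order)
    if earliest is None:
        return False  # unknown is not the same as unaffected
    return release_order.index(version) < release_order.index(earliest)


order = ["Product1.2.0", "Product1.2.1", "Product1.2.2", "Product1.3.0"]
print(earliest_affected(["Product1.2.2", NOT_SHIPPED], order))  # Product1.2.2
print(known_unaffected("Product1.2.1", ["Product1.2.2", NOT_SHIPPED], order))  # True
print(known_unaffected("Product1.2.1", ["Product1.2.2"], order))  # False
```

The key design point is that a missing marker returns "unknown" rather than "unaffected", which matches the distinction the marker exists to make.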

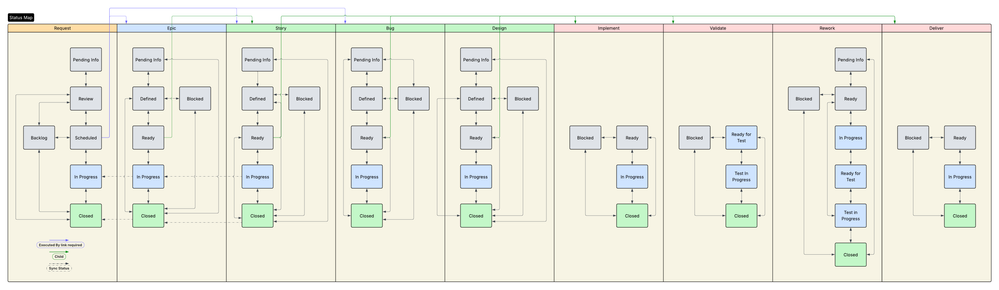

Statuses include the following:

- Pending Info: Initial status that acts as a "draft" state; also indicates that more information is needed before the item is actionable.

- Review: Requests - The Product/Service Lead needs to review the item for prioritization and disposition.

- Backlog: Requests - Item is valuable but cannot be scheduled at this time.

- Scheduled: Requests - Item has been assigned a Target Release, and Delivery items have been created, assigned to teams, and linked to the Request via an "Executing" link. From this point, Request status updates are handled by Automation rules.

- Defined: All necessary information has been added, and the item is ready for team review before being made Ready.

- Blocked: Item cannot be made Ready until a linked item is completed. The blocking item is linked with a "Blocks" link: a Delivery item if it is within the same product, or a Request item if it is in a different product.

  - Automation: A Blocked item is moved to Defined when no unresolved "Blocked By" items remain; the rule is triggered when a blocking item is resolved. Whenever a blocking item is resolved, a comment is added to the blocked item with the blocking item's Fix Version and that version's Target Release date. The same rule lets subtasks that block each other move into their ready state once the subtask they depend on is complete (e.g., Validate moves from Blocked to Ready for Test when the Implement subtask is completed).

- Ready: The team has confirmed that all necessary information has been provided and that no other work prevents this item from starting. The Definition of Ready should include estimates provided, Fix Version selected, and Team assigned, and it can differ by work item type.

  - Automation: When a Story, Design, or Bug work item moves to Ready, subtasks can be added to it automatically based on the Definition of Done.

- In Progress: Executing work has started.

  - Automation: An item moves from Ready to In Progress when any of its child items moves to In Progress. The same applies to Requests: a Request moves to In Progress when any item linked to it with an "Executing" link moves to In Progress.

- Ready for Test: Validate and Rework subtasks - prerequisite conditions have been met for Validation assessment to begin.

- Test in Progress: Validation is currently in progress.

- Closed: Requires the Resolution field to be set; indicates that no further activity is required to meet this item's acceptance criteria.

  - Automation: When all "Executing" linked items or all child items are closed, the item can be closed automatically. If anyone is concerned about items closing implicitly, the rule can instead add a comment tagging the assignee to say that all necessary items are complete.
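The automation rules above can be sketched as a small in-memory model. This is a Python illustration under stated assumptions, not Jira Automation rule syntax: `Item`, `link`, `add_block`, `start`, and `close` are hypothetical names, parent/child and "Executing" links are treated the same way for roll-ups, and the blocker comment is simplified (the rule described above also records the Fix Version and its Target Release date).

```python
from typing import List, Optional


class Item:
    """Hypothetical, simplified work item used only to illustrate the rules."""

    def __init__(self, key: str, kind: str, status: str,
                 unblocked_status: str = "Defined") -> None:
        self.key = key
        self.kind = kind                          # e.g. "Request", "Epic", "Story", "Design"
        self.status = status
        self.unblocked_status = unblocked_status  # status to enter once unblocked
        self.resolution: Optional[str] = None
        self.upstream: List["Item"] = []          # parent, plus Requests this item executes
        self.downstream: List["Item"] = []        # children, plus items executing this one
        self.blocks: List["Item"] = []
        self.blocked_by: List["Item"] = []
        self.comments: List[str] = []


def link(upstream: Item, downstream: Item) -> None:
    """Parent/child or "Executing" link; both roll status up the same way here."""
    upstream.downstream.append(downstream)
    downstream.upstream.append(upstream)


def add_block(blocker: Item, blocked: Item) -> None:
    """'Blocks' link: `blocker` must close before `blocked` can move on."""
    blocker.blocks.append(blocked)
    blocked.blocked_by.append(blocker)


def start(item: Item) -> None:
    """Move an item to In Progress and propagate the status upward."""
    item.status = "In Progress"
    if item.kind == "Design":
        return  # Design items never move their Epic to In Progress
    for up in item.upstream:
        if up.status in ("Ready", "Scheduled"):  # Requests wait in Scheduled
            start(up)


def close(item: Item, resolution: str = "Done") -> None:
    """Close an item, release what it blocks, and close finished parents."""
    item.status = "Closed"
    item.resolution = resolution
    for blocked in item.blocks:
        # Simplified comment; the real rule adds Fix Version and release date.
        blocked.comments.append(f"Blocking item {item.key} resolved")
        if blocked.status == "Blocked" and all(b.status == "Closed" for b in blocked.blocked_by):
            blocked.status = blocked.unblocked_status
    if item.kind == "Design":
        return  # Design items are excluded from parent roll-ups
    for up in item.upstream:
        if up.status != "Closed" and all(d.status == "Closed" for d in up.downstream):
            close(up)


# Walk one Story through the flow: Request -> Epic -> Story -> subtasks.
request = Item("REQ-1", "Request", "Scheduled")
epic = Item("PAY-10", "Epic", "Ready")
story = Item("PAY-11", "Story", "Ready")
implement = Item("PAY-12", "Implement", "Ready")
validate = Item("PAY-13", "Validate", "Blocked", unblocked_status="Ready for Test")
deliver = Item("PAY-14", "Deliver", "Blocked", unblocked_status="Ready")
link(request, epic)
link(epic, story)
for sub in (implement, validate, deliver):
    link(story, sub)
add_block(implement, validate)
add_block(validate, deliver)

start(implement)
print(story.status, epic.status, request.status)  # In Progress In Progress In Progress
close(implement)
print(validate.status)  # Ready for Test
close(validate)
close(deliver)
print(story.status, epic.status, request.status)  # Closed Closed Closed
```

Both roll-ups are recursive, which is what lets a single subtask transition ripple from the Story through the Epic to the Request.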

Defect Lifecycle

- Story Defect: Acceptance Criteria have not been met for an In Progress work item. Reported in this model as a Rework subtask. This defect is unlikely to affect other teams working on the product, because work that is still In Progress is rarely something other executing teams depend on yet. It becomes a Release Defect if the work item is completed without fixing it.

- Release Defect: The specification has not been met for an unreleased version of a product. This defect may impact other teams working on the product but does not impact end users. Identified as a Bug work item whose Affected Versions are all unreleased. It becomes an Escaped Defect if a version is released without fixing it.

- Escaped Defect: The specification has not been met for a released version of a product. This defect can impact end users. Identified as a Bug work item with at least one released version in Affected Versions.

- Customer Bug: A customer (internal or external) has reported that a product they are using does not meet its specification. These are tracked as Requests with Request Intent = Fix (as opposed to Feature) and Request Source = External Customer or Internal Customer. They are linked to Bugs (Escaped Defects) or Epics, depending on how the executing team needs to organize the work to resolve them.
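The four categories can be expressed as one classification function. This Python sketch assumes the caller already knows which versions are released (a hypothetical `released_versions` input) and treats "NotShipped" as a marker rather than a real version; the field values mirror the ones named above, but the function itself is illustrative.

```python
from typing import Iterable, Optional

CUSTOMER_SOURCES = {"External Customer", "Internal Customer"}


def classify_defect(item_type: str,
                    affected_versions: Iterable[str] = (),
                    released_versions: Iterable[str] = (),
                    request_intent: Optional[str] = None,
                    request_source: Optional[str] = None) -> Optional[str]:
    """Map a work item to one of the defect categories above, or None."""
    if item_type == "Rework":
        return "Story Defect"
    if item_type == "Bug":
        # Ignore the NotShipped marker; it is not a real product version.
        versions = {v for v in affected_versions if v != "NotShipped"}
        if not versions:
            return None  # Affected Versions not triaged yet
        if versions & set(released_versions):
            return "Escaped Defect"
        return "Release Defect"
    if (item_type == "Request" and request_intent == "Fix"
            and request_source in CUSTOMER_SOURCES):
        return "Customer Bug"
    return None


released = {"Product1.2.0", "Product1.2.1"}
print(classify_defect("Bug", ["Product1.2.2", "NotShipped"], released))    # Release Defect
print(classify_defect("Bug", ["Product1.2.1", "Product1.2.2"], released))  # Escaped Defect
print(classify_defect("Request", request_intent="Fix",
                      request_source="External Customer"))                # Customer Bug
```

Note that a Bug's category can change without anyone editing it: releasing a version moves it into `released_versions`, turning a Release Defect into an Escaped Defect, which is exactly the transition described above.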

Metrics & Reporting

- Agile Health

  - Sprint Completion (Story Points Completed / Story Points Committed)

  - Sprint Predictability (Stories Completed / Stories Committed at Sprint Start)

  - Sprint Velocity Variance (Avg Story Points of items resolved in cycle)

  - Grooming Velocity Variance (Avg Story Points of items made "Ready" in cycle)

- Quality

  - Bug reports from each Defect category (Created/Resolved)

  - Customer Bugs (Requests: Fix, External/Internal - Created/Resolved)

Jellyfish is a useful tool for tracking these metrics where they aren't directly available within a Jira dashboard or sprint report.
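The two sprint ratios and the averages listed above are simple enough to show directly. This Python sketch assumes a hypothetical per-Story record and counts only Stories committed at sprint start in both ratios; if your teams include scope added mid-sprint in Sprint Completion, adjust the filter. The averages are computed exactly as described (mean Story Points per item).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SprintStory:
    """Hypothetical per-Story record pulled from a sprint."""
    points: float
    committed_at_start: bool  # in the sprint when it started
    completed: bool           # resolved by sprint end


def sprint_completion(stories: List[SprintStory]) -> float:
    """Story Points Completed / Story Points Committed."""
    committed = [s for s in stories if s.committed_at_start]
    total = sum(s.points for s in committed)
    done = sum(s.points for s in committed if s.completed)
    return done / total if total else 0.0


def sprint_predictability(stories: List[SprintStory]) -> float:
    """Stories Completed / Stories Committed at Sprint Start."""
    committed = [s for s in stories if s.committed_at_start]
    if not committed:
        return 0.0
    return sum(1 for s in committed if s.completed) / len(committed)


def average_points(points: List[float]) -> float:
    """Average Story Points of items resolved (or made Ready) in a cycle."""
    return sum(points) / len(points) if points else 0.0


sprint = [
    SprintStory(5, True, True),
    SprintStory(3, True, False),
    SprintStory(2, True, True),
    SprintStory(8, False, True),  # added mid-sprint; excluded from both ratios
]
print(sprint_completion(sprint))                # 0.7
print(round(sprint_predictability(sprint), 2))  # 0.67
print(average_points([5, 2, 8]))                # 5.0
```

Computing completion by points and predictability by count, as the list does, means a team that finishes many small Stories but misses one large one will show high predictability and lower completion.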

Example End-to-End Flow

- A stakeholder files a Request against a Product. In this instance, Request Intent = Feature and Request Source = Product Management. The stakeholder completes all requested information and moves the Request to Review status.

- The Product Lead assesses all Requests in Review status and judges this one valuable enough to schedule. The Product Lead collects the information needed for prioritization, including a high-level engineering effort estimate and the value to end users, and assigns a Target Release version. The Product Lead then creates an Epic, assigns it to a Team, and links it to the Request with an "Executing" link. The Request status is updated to "Scheduled".

- The Epic starts in "Pending Info" status, where the team's Product Owner completes the scope definition for that team and creates Design child work items to track any feature definition needed to make the Epic Ready. Design items are assigned to designers as they become available. Once the Design items are complete, the Product Owner fills in any remaining details and moves the Epic to "Defined".

- The executing team reviews Epics in Defined status. If information is still missing, they send the Epic back to Pending Info and list the missing items in the comments. If they cannot plan the feature until another known item is completed, they move the Epic to Blocked and link it to the blocking work item with a "Blocks" link. Otherwise, they provide a high-level Story Point estimate on the Epic and move it to "Ready".

- The executing team, in coordination with their Product Owner, breaks the work down into Stories, each defining a manageable chunk of value. Stories are created as child items of the Epic. Once a Story is sufficiently defined, it moves to "Defined", where the team reviews and estimates the work. Once the definition is approved and estimates are added, the Story moves to "Ready". At this point, Implement, Validate, and Deliver subtasks are generated for the Story: Implement starts as Ready, Validate is blocked by Implement, and Deliver is blocked by Validate.

- "Ready" Stories are added to a Sprint (for Scrum teams). When the Sprint starts, the Story's executing assignee moves the Implement subtask to In Progress. Automation moves the Story to In Progress, which in turn moves the Epic to In Progress, which in turn moves the Request to In Progress.

- The executor completes their Implement subtask, and automation moves Validate to "Ready for Test".

- The tester moves the Validate subtask to Test in Progress. They find a problem with the implementation, so they open a new Rework subtask describing the failure. Once all details are added, they move the Rework item from Pending Info to Ready; by default it is assigned to the Story's assignee (the lead executor). Once the tester completes their test cases, they close the Validate subtask (the resolution is set automatically by a transition post-function).

- The executor moves the Rework subtask to In Progress, then to Ready for Test, at which point the assignee changes to the Rework item's reporter. The tester then moves it to Test in Progress. If the test fails, the tester adds notes and moves it back to Ready, assigned to the executor. If it passes, the Rework item is closed (the resolution is set automatically by a transition post-function).

- Once all other subtasks are completed, the Deliver subtask moves from Blocked to Ready. When the Deliver subtask is completed, the Story is closed automatically, with its resolution set to Done by a transition post-function.

- Once all child work items are closed, the parent Epic is automatically closed with a Done resolution (set by a transition post-function).

- Once all Epics and other "Executing" linked items are closed, a comment is added to the Request with the Fix Version (and that version's expected release date from the Releases section), and the Request is closed with resolution Done.

The same steps apply to Bugs and Design items. A Bug may already exist in a backlog when a Fix Request is submitted, in which case it can simply be linked. Design items are excluded from the automation that updates the parent Epic's status, since they precede the Epic's full definition.
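The Rework loop in the flow above is effectively a small state machine with assignee hand-offs. Here is a minimal Python sketch, assuming the transitions and assignee changes described in the steps; the `Rework` class, the transition table, and the "Done" resolution on close are illustrative assumptions (in Jira the resolution comes from the transition post-function).

```python
from dataclasses import dataclass
from typing import Dict, Optional, Set

# Allowed Rework transitions, taken from the flow above.
REWORK_TRANSITIONS: Dict[str, Set[str]] = {
    "Pending Info": {"Ready"},
    "Ready": {"In Progress"},
    "In Progress": {"Ready for Test"},
    "Ready for Test": {"Test in Progress"},
    "Test in Progress": {"Ready", "Closed"},  # fail -> Ready, pass -> Closed
}


@dataclass
class Rework:
    """Hypothetical Rework subtask; field names are illustrative."""
    reporter: str        # the tester who logged the failure
    story_assignee: str  # lead executor of the parent Story
    status: str = "Pending Info"
    assignee: Optional[str] = None
    resolution: Optional[str] = None

    def transition(self, to: str) -> None:
        if to not in REWORK_TRANSITIONS.get(self.status, set()):
            raise ValueError(f"Rework cannot move from {self.status!r} to {to!r}")
        if to == "Ready":
            self.assignee = self.story_assignee  # new item or failed re-test
        elif to == "Ready for Test":
            self.assignee = self.reporter        # hand back to the tester
        elif to == "Closed":
            self.resolution = "Done"             # stands in for the post-function
        self.status = to


rework = Rework(reporter="tess", story_assignee="eli")
for step in ("Ready", "In Progress", "Ready for Test", "Test in Progress"):
    rework.transition(step)
print(rework.assignee)  # tess
rework.transition("Ready")  # re-test failed: back to the executor
print(rework.assignee)  # eli
```

Tying the assignee change to the transition, rather than leaving it manual, is what keeps the item from stalling with the wrong person between test rounds.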

Lessons Learned & Open Questions

- Statuses can easily multiply when there are no clear rules about which work item types track which activities and definitions. Modeling all activities as subtasks and all scope as higher-level items reduces the number of unique statuses and workflows required to track work.

- A consistently structured data model allows the organization to write comprehensive User Guide documentation that applies equally to every team, which improves onboarding and cross-team collaboration.

- Jira Forms feeding Requests let Product & Service teams define exactly what information they need for an actionable work request. Because each Request stays as the requestor wrote it, the requestor can track progress no matter how complicated the execution becomes.

- Linking Requests with an "Executing" link type gives us a many-to-many relationship between work items. A single Epic can address many Requests, many Epics can deliver a single Request, and a Request that requires work from more than one Product group can be executed by a combination of Epics and other Requests, creating a clear dependency chain.

- The best practices of engineering Agile can be applied to non-engineering teams through this model, by framing "Products" as anything a team builds to serve an end user and by defining Team Roles so they apply to any executing part of the organization.

- Parts of this model overlap with Jira Service Management (JSM) and Jira Product Discovery (JPD). I'm still working out how to define those relationships in a way that doesn't require every team to integrate with JSM or JPD.

- Data administration overhead is a common complaint about subtask-heavy workflows; however, a consistent data model combined with well-designed automation addresses many of these complaints.
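The many-to-many "Executing" relationship also makes it possible to answer a requestor's question of what a Request is still waiting on, by walking the links. Here is a Python sketch, assuming a hypothetical `executed_by` mapping from each item to the items that execute it and a set of closed keys; nested Requests are followed, so cross-product dependency chains show up. The function and keys are illustrative, not a Jira API.

```python
from typing import Dict, List, Optional, Set


def outstanding_work(key: str,
                     executed_by: Dict[str, List[str]],
                     closed: Set[str],
                     seen: Optional[Set[str]] = None) -> Set[str]:
    """Return the open leaf items (e.g. Epics, Bugs) that `key` still waits on.

    `executed_by` maps an item to the items linked to it by an "Executing"
    link. Items with no entry are treated as leaves. Nested Requests are
    followed recursively, so cross-product dependency chains are included.
    """
    if seen is None:
        seen = set()
    if key in seen:
        return set()  # already visited (shared Epic or accidental cycle)
    seen.add(key)
    executors = executed_by.get(key, [])
    if not executors:
        return set() if key in closed else {key}
    waiting: Set[str] = set()
    for executor in executors:
        waiting |= outstanding_work(executor, executed_by, closed, seen)
    return waiting


executed_by = {
    "REQ-1": ["PAY-10", "PAY-20", "REQ-7"],  # two Epics plus another product's Request
    "REQ-2": ["PAY-10"],                      # PAY-10 also addresses REQ-2
    "REQ-7": ["HOST-5"],                      # the other product's Epic
}
closed = {"PAY-20"}
print(sorted(outstanding_work("REQ-1", executed_by, closed)))  # ['HOST-5', 'PAY-10']
print(sorted(outstanding_work("REQ-2", executed_by, closed)))  # ['PAY-10']
```

Because PAY-10 appears under both Requests, closing it advances both at once, which is the practical payoff of the many-to-many link.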

Your Feedback

Good Ideas / Bad Ideas

What parts of this structure resonate with you? What feels off?

Jira Service Management Integration

How could JSM projects complement this model, especially for service teams managing system configurations or internal support?

Jira Product Discovery Integration

How should JPD requests flow into delivery pipelines? Should we formalize the link between JPD requests and Epics in product projects?

Governance & Change Management

What governance structures have worked for you? How do you manage change requests and field definitions?

Josh McManus