Community resources

Permission denied while accessing Google bucket via bitbucket

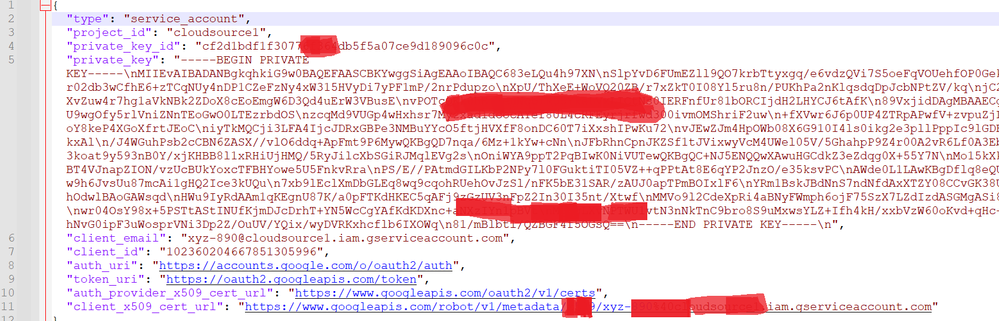

I am trying to set up CI/CD with a GCP bucket. I created a service account and gave it the Storage Object Admin role. I added a variable, KEY_FILE, containing the key from my service account, but when I run the pipeline it throws an error during the transfer step.

Error:

ServiceException: 401 Anonymous caller does not have storage.objects.create access to the Google Cloud Storage object. Permission 'storage.objects.create' denied on resource (or it may not exist)

Below is my YAML file:

```yaml
image: node:16

pipelines:
  branches:
    main:
      - step:
          name: Installation
          caches:
            - node
          script:
            - npm install
          artifacts:
            - node_modules/** # Save modules for next steps
      #- step:
      #    name: Lint
      #    script:
      #      - npm run lint
      - step:
          name: Build
          script:
            - npm run build:production
          artifacts:
            - dist/** # Save build for next steps
      - step:
          name: Transfer
          script:
            - pipe: atlassian/google-cloud-storage-deploy:1.2.0
              variables:
                KEY_FILE: '$KEY_FILE'
                PROJECT: 'cloudsource1'
                BUCKET: 'brobucket'
                SOURCE: '.'
```

Accepted answer

Hello @Robin,

Welcome to the community!

The atlassian/google-cloud-storage-deploy documentation says the following about the KEY_FILE variable:

| Variable | Usage |
| --- | --- |
| KEY_FILE (*) | Base64-encoded content of the key file for a Google service account. To encode this content, follow the encode private key doc. |

(*) = required variable.
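If you are unsure what ended up in the variable, a quick local check can tell whether a value matches this description. The sketch below is a minimal Python example, not part of the pipe: the function name `looks_like_encoded_key` is hypothetical, and it assumes the key is a standard Google service account JSON key file, which contains the fields `type` (set to `service_account`), `client_email` and `private_key`. It returns True only for single-line base64 that decodes to such a file, so pasting the raw JSON (unencoded) makes it return False.

```python
import base64
import json
import os

# Fields present in a Google service account JSON key file (assumed check, not a pipe rule).
REQUIRED_FIELDS = ("type", "client_email", "private_key")


def looks_like_encoded_key(value: str) -> bool:
    """Return True if `value` is single-line base64 that decodes to a service account key."""
    try:
        # validate=True rejects any non-base64 character: the braces and quotes of
        # raw (unencoded) JSON, and the newlines of line-wrapped base64 output.
        raw = base64.b64decode(value.strip(), validate=True)
        data = json.loads(raw.decode("utf-8"))
    except ValueError:
        # binascii.Error, UnicodeDecodeError and json.JSONDecodeError all subclass ValueError.
        return False
    return (
        isinstance(data, dict)
        and data.get("type") == "service_account"
        and all(field in data for field in REQUIRED_FIELDS)
    )


if __name__ == "__main__":
    # Reads KEY_FILE from the environment and reports the result without printing the secret.
    ok = looks_like_encoded_key(os.environ.get("KEY_FILE", ""))
    print("KEY_FILE looks valid" if ok else "KEY_FILE is not a base64-encoded service account key")
```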

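The "Anonymous caller" part of the error suggests that the upload reached Google Cloud Storage without any credentials, i.e. the pipe could not get a usable key from KEY_FILE. Could you please confirm whether you base64-encoded the contents of the service account key file before saving it to the KEY_FILE variable?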

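If not, you can base64-encode it with one of the following commands (replace my_ssh_key with the path to the JSON key file you downloaded for your service account):

Linux:

```
base64 -w 0 < my_ssh_key
```

macOS:

```
base64 < my_ssh_key
```

Windows (PowerShell):

```
[convert]::ToBase64String((Get-Content -path "~/.ssh/my_ssh_key" -Encoding byte))
```

On PowerShell 6 and later, replace `-Encoding byte` with `-AsByteStream`.

Then save the encoded value as a variable named KEY_FILE at either the workspace or repository level.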
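If none of those shells is convenient, the same single-line encoding can be produced with Python's standard library. This is a minimal sketch, not something the pipe requires: the script name `encode_key.py` and the function `encode_key_file` are hypothetical, and the guard that rejects anything other than a service account JSON file is an extra assumption meant to catch encoding the wrong file (for example an SSH key).

```python
import base64
import json
import sys
from pathlib import Path


def encode_key_file(path: str) -> str:
    """Return the file's contents as one line of base64 (like `base64 -w 0`)."""
    raw = Path(path).read_bytes()
    # Extra guard (an assumption, not a pipe requirement): refuse anything that is
    # not a service account JSON key, e.g. an SSH private key. json.loads raises a
    # ValueError (such as json.JSONDecodeError) for non-JSON input.
    data = json.loads(raw)
    if not isinstance(data, dict) or data.get("type") != "service_account":
        raise ValueError(f"{path} does not look like a service account key file")
    # b64encode never inserts line breaks (unlike base64.encodebytes).
    return base64.b64encode(raw).decode("ascii")


if __name__ == "__main__":
    # Hypothetical usage: python encode_key.py path/to/key.json
    if len(sys.argv) == 2:
        print(encode_key_file(sys.argv[1]))
```

For example, `python encode_key.py path/to/key.json` prints the value to paste into KEY_FILE. Because `base64.b64encode` does not wrap lines, the output is a single line, matching what `base64 -w 0` produces.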

Let me know in case you run into any issues.

Thank you, @Robin!

Patrik S
