Year-End Retro & Next-Year Planning: A Practical Playbook

There are two kinds of year-end reviews:

- the ones that produce 63 slides and zero change, and

- the ones that quietly fix the two or three things slowing you down.

Let’s aim for #2.

This is a straight-talking guide to wrap up the year, set next-year targets, and build a cadence you’ll actually keep. We’ll start with planning frameworks (plain-English, pros/cons, where they shine). Then we’ll translate strategy into a few timing metrics that tell the truth about flow. Only then do we show how to pull those numbers from Jira (yes—Time in Status helps, but we’ll get there naturally).😌

Step 1 — Pick a Planning Frame You’ll Actually Use

Every framework is a tool. The trick is matching it to your org’s maturity and appetite for change. Use one. Combine two. Just don’t reinvent one in a slide at 1 a.m.

OKRs (Objectives & Key Results)

Best when: you need focus and measurable outcomes across many teams.

What to watch: too many KRs = no KRs. Tie KRs to observable flow signals (e.g., cycle time, carryover, wait time) instead of vague “improve collaboration.”

Example KR: “Reduce average Waiting for Approval time from 3.1 days → 2.0 days by Q2.”
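To see how far a KR like this has moved mid-quarter, one common approach is to score progress as the fraction of the gap closed between baseline and target. The sketch below assumes that scoring rule and a hypothetical helper name, `kr_progress`. The 2.6-day reading is made-up sample data, not a real result.

```python
def kr_progress(baseline: float, target: float, current: float) -> float:
    """Fraction of the baseline-to-target gap closed (0.0 = none, 1.0 = met).

    Works for "lower is better" KRs (baseline > target) and
    "higher is better" KRs (baseline < target) alike.
    """
    if baseline == target:
        raise ValueError("baseline and target must differ")
    progress = (baseline - current) / (baseline - target)
    # Clamp so a regression reads as 0% and overshooting reads as 100%.
    return max(0.0, min(1.0, progress))


# KR from the text: Waiting for Approval 3.1 days -> 2.0 days.
# The mid-quarter reading of 2.6 days is hypothetical sample data.
print(f"Progress: {kr_progress(3.1, 2.0, 2.6):.0%}")  # Progress: 45%
```

Clamping keeps the scoreboard readable; if you want to see regressions explicitly, drop the clamp and let progress go negative.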

Hoshin Kanri (X-Matrix / Catchball)

Best when: you must align strategy → initiatives → owners → measures, and keep it aligned all year.

What to watch: this is powerful but opinionated; assign an owner to maintain the X-matrix monthly.

Flow-friendly angle: map each “breakthrough objective” to a bottleneck you’ll relieve (e.g., QA throughput, change lead time).

V2MOM (Vision, Values, Methods, Obstacles, Measures)

Best when: clarity beats complexity; you need a shared story with explicit risks.

What to watch: don’t leave “Measures” as vanity KPIs; pick time-based measures that show work moving (or not).

Example measure: “Cut Dev↔QA ping-pong loops by 30%.”
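A measure like this needs an unambiguous loop definition. The sketch below assumes one: a loop is any transition from a QA status straight back to a development status, and a reopen is any transition out of a done status. The status names and the `rework_signals` helper are hypothetical; substitute your own workflow.

```python
# Hypothetical status names; replace with your workflow's statuses.
DEV_STATUSES = {"In Progress"}
QA_STATUSES = {"In QA"}
DONE_STATUSES = {"Done"}


def rework_signals(status_path: list[str]) -> dict[str, int]:
    """Count Dev<->QA loops and reopens in one issue's ordered status path."""
    loops = 0
    reopens = 0
    for prev, nxt in zip(status_path, status_path[1:]):
        if prev in QA_STATUSES and nxt in DEV_STATUSES:
            loops += 1  # QA sent the work back to development
        if prev in DONE_STATUSES and nxt not in DONE_STATUSES:
            reopens += 1  # finished work was reopened
    return {"dev_qa_loops": loops, "reopens": reopens}


path = ["To Do", "In Progress", "In QA", "In Progress", "In QA",
        "Done", "In Progress", "In QA", "Done"]
print(rework_signals(path))  # {'dev_qa_loops': 1, 'reopens': 1}
```

Averaging `dev_qa_loops` per resolved issue each quarter gives a baseline against which a 30% cut can be checked.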

4DX (WIGs, Lead Measures, Scoreboard, Cadence)

Best when: teams need a rhythm of weekly commitments and visible progress.

What to watch: pick lead measures you control (e.g., “% of PRs reviewed within 24 business hours”), not lag-only metrics.
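A lead measure like this becomes a simple ratio once each PR's wait for its first review has been converted to business hours. The sketch below assumes that conversion already happened upstream; `share_within` and the sample waits are hypothetical.

```python
def share_within(waits_in_business_hours: list[float], limit: float = 24.0) -> float:
    """Share of PRs whose first review arrived within `limit` business hours."""
    if not waits_in_business_hours:
        return 0.0
    on_time = sum(1 for hours in waits_in_business_hours if hours <= limit)
    return on_time / len(waits_in_business_hours)


# Hypothetical week of first-review waits, already in business hours.
this_week = [3.5, 30.0, 12.0, 24.0, 50.0]
print(f"Reviewed within 24 business hours: {share_within(this_week):.0%}")
# Reviewed within 24 business hours: 60%
```

The `<=` counts a review at exactly 24 hours as on time; pick the boundary once and keep it across quarters.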

Good scoreboard: a tiny dashboard with Cycle Time, Wait %, Reopens, and Carryover.

Balanced Scorecard (Financial, Customer, Process, Learning)

Best when: executives need a broad view and can sponsor cross-functional fixes.

What to watch: the Process quadrant must track flow, not just “tickets closed.”

Flow entry: time in “Waiting on Customer” and “Blocked,” plus approval time, is the bedrock of the Process quadrant.

Wardley Mapping (capability maturity & situational awareness)

Best when: you’re deciding what to build vs. buy vs. automate.

What to watch: pair it with timing evidence (e.g., how much time you lose in provisioning, testing, or compliance) before big bets.

Short version: pick one framework for direction, then use timing to keep you honest. Counting work makes pretty charts; timing work changes outcomes.

Step 2 — Name the Few Numbers That Tell the Truth

If you only track totals, you’ll only learn you were “busy.” Time shows why things felt busy.

- Lead Time (Created → Done): customer experience and throughput.

- Cycle (Handling) Time (Start → Done): operational speed once work has started, including any waits along the way.

- Waiting Time: separates team delays from external delays (e.g., approvals, customers, vendors).

- Rework / Ping-Pong: loops (e.g., Dev↔QA) and reopens; these are quality/clarity signals.

- Assignee Time: effort proxy and capacity balancing (who’s quietly carrying load).

- Scope Change & Carryover: predictability; a sanity check on planning and intake.
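The first two definitions can be computed straight from an issue's status history. The sketch below assumes the transitions are already extracted (for example, from the issue changelog) as `(timestamp, new_status)` pairs, and uses a hypothetical policy in which “In Progress” starts the clock and “Done” stops it. It measures wall-clock time; business-hours calendars are a separate step.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical Start/Stop policy; publish your own and reuse it every quarter.
START_STATUSES = {"In Progress"}
DONE_STATUSES = {"Done"}


def lead_and_cycle(
    created: datetime, transitions: list[tuple[datetime, str]]
) -> tuple[Optional[timedelta], Optional[timedelta]]:
    """Return (lead_time, cycle_time) for one issue.

    transitions: (timestamp, new_status) pairs, oldest first.
    Lead time:  created -> the final move into a Done status.
    Cycle time: first move into a Start status -> the same Done moment.
    Open or reopened issues return (None, None).
    """
    if not transitions or transitions[-1][1] not in DONE_STATUSES:
        return None, None
    done_at = transitions[-1][0]
    started_at = next(
        (ts for ts, status in transitions if status in START_STATUSES), None
    )
    cycle = done_at - started_at if started_at is not None else None
    return done_at - created, cycle


history = [
    (datetime(2024, 3, 5, 10, 0), "In Progress"),
    (datetime(2024, 3, 7, 15, 0), "In QA"),
    (datetime(2024, 3, 8, 11, 0), "Done"),
]
lead, cycle = lead_and_cycle(datetime(2024, 3, 4, 9, 0), history)
print(lead, "|", cycle)  # 4 days, 2:00:00 | 3 days, 1:00:00
```

Using the final move into Done means a reopened-then-finished issue reports its real finish, not the first premature one.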

Make the math fair (so people trust it):

- Use business-hours calendars per team/time zone (don’t punish weekends or holidays).

- Define Start / Pause / Stop once (group statuses and publish the policy).

- Compare like-for-like (same projects/sprints/labels; exclude outliers).

- Save presets so Q1, Q2, Q3, Q4 use identical logic.
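Business-hours math is the piece most worth getting right. Here is a minimal sketch, assuming naive datetimes already in the team's local time zone, a Monday to Friday week, a single 09:00 to 17:00 window, and a caller-supplied holiday set; `business_hours_between` is a hypothetical name, not the app's API. Real calendars (lunch breaks, split shifts, daylight-saving changes) need more care.

```python
from datetime import date, datetime, time, timedelta


def business_hours_between(
    start: datetime,
    end: datetime,
    day_start: time = time(9, 0),
    day_end: time = time(17, 0),
    holidays: frozenset[date] = frozenset(),
) -> float:
    """Hours between start and end that fall inside working hours.

    Assumes naive local datetimes, a Monday-Friday week, and one
    working window per day.
    """
    if end <= start:
        return 0.0
    total = timedelta()
    day = start.date()
    while day <= end.date():
        if day.weekday() < 5 and day not in holidays:  # Mon=0 ... Fri=4
            window_open = datetime.combine(day, day_start)
            window_close = datetime.combine(day, day_end)
            overlap = min(end, window_close) - max(start, window_open)
            if overlap > timedelta():
                total += overlap
        day += timedelta(days=1)
    return total.total_seconds() / 3600


# Friday 11:00 to Sunday 11:00 is 48 wall-clock hours...
fri, sun = datetime(2024, 3, 8, 11, 0), datetime(2024, 3, 10, 11, 0)
print((sun - fri).total_seconds() / 3600)  # 48.0
# ...but only Friday 11:00-17:00 counts as business time.
print(business_hours_between(fri, sun))  # 6.0
```

Walking day by day and clipping each working window to the interval keeps the logic easy to audit, which matters more here than speed.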

Step 3 — Turn Strategy into a Quarterly Flow Plan

A simple cadence beats a heroic kickoff.

Quarterly (2 hours):

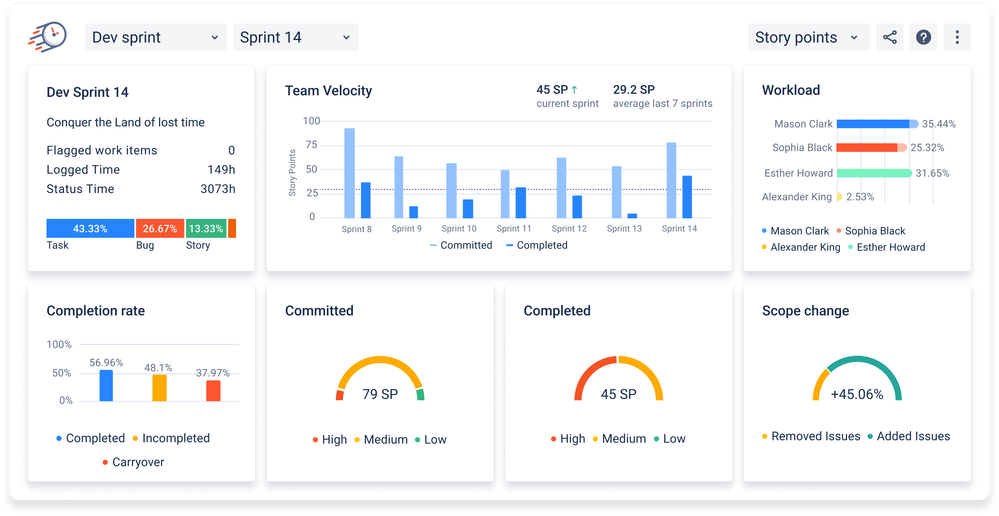

- Outcomes: Commit vs. Completed, Carryover, Scope Change—what surprised us?

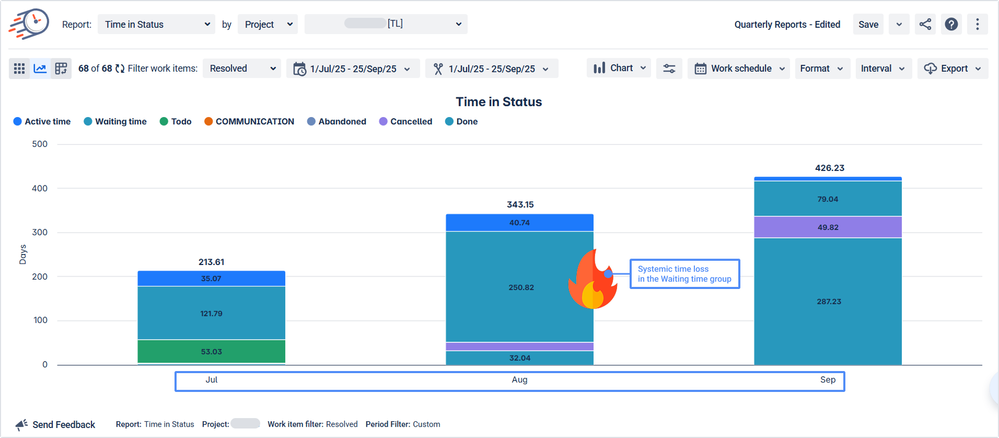

- Flow: Working vs. Waiting split—pick one bottleneck to attack.

- Quality: Reopens and Dev↔QA loops—decide one policy/checklist change.

- Capacity: Assignee Time—rebalance or pair where the load is lopsided.

- Change Check: For any experiment (automation, new gate), run a before/after time comparison on equal scopes.
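The Outcomes row needs agreed definitions before the numbers mean anything. The sketch below assumes one set: commitment is the sprint scope at start, commitment completed is the share of that scope finished, carryover is end-of-sprint scope still not done, and scope change counts items added or removed after start. These definitions and the `sprint_outcomes` helper are assumptions for illustration; the Sprint Performance Report may define them differently.

```python
from typing import Optional


def sprint_outcomes(
    committed: set[str],
    final_scope: set[str],
    done: set[str],
    points: Optional[dict[str, float]] = None,
) -> dict[str, float]:
    """Summarize one sprint from issue-key sets (hypothetical definitions)."""

    def size(keys: set[str]) -> float:
        # Issue Count by default; sum Story Points when a mapping is supplied
        # (unestimated issues count as 0 points).
        if points is None:
            return float(len(keys))
        return sum(points.get(key, 0.0) for key in keys)

    base = size(committed)
    return {
        "commitment_completed": size(committed & done) / base if base else 0.0,
        "completed_total": size(final_scope & done),
        "carryover": size(final_scope - done),
        "scope_added": size(final_scope - committed),
        "scope_removed": size(committed - final_scope),
    }


# Hypothetical sprint: D was dropped mid-sprint, E was added.
summary = sprint_outcomes(
    committed={"A", "B", "C", "D"},
    final_scope={"A", "B", "C", "E"},
    done={"A", "B", "E"},
)
print(summary["commitment_completed"], summary["carryover"])  # 0.5 1.0
```

Working with sets of issue keys makes added, removed, and carried-over items fall out as plain set differences.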

Monthly (45 min): quick health check—no slide decks.

Weekly (15 min): the 4DX-style “what one thing moves a metric this week?”

Step 4 — Okay, but where do the numbers come from?

From Jira’s issue history. You already record every status change, assignee handoff, and resolution. The only question is: do you want to extract it manually—or let a purpose-built app do the math without asking humans to run timers?

How we pull trustworthy timing from Jira (without babysitting)

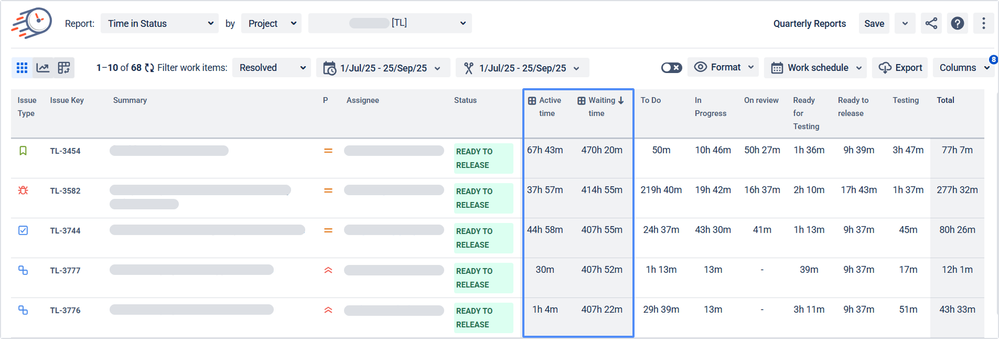

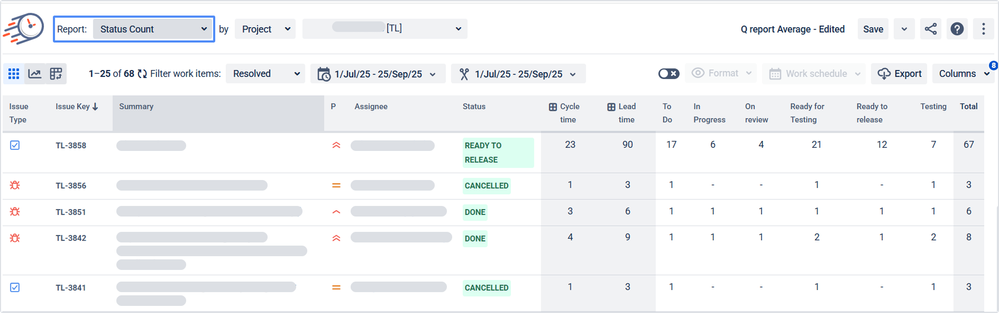

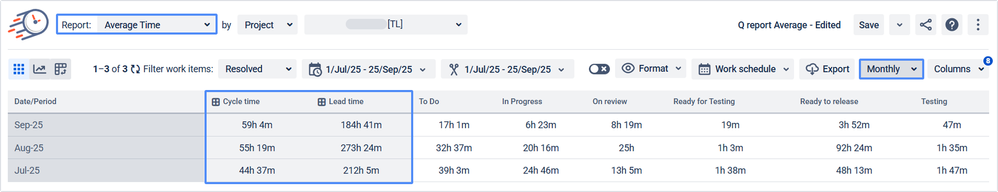

The Time in Status app reads issue history and calculates how long work spent in each status, optionally restricted to business hours per team or time zone. That lets you answer strategy questions with clean, repeatable numbers:

- Where do we wait? Group statuses into Working vs. Waiting to split cycle time into active time and idle time.

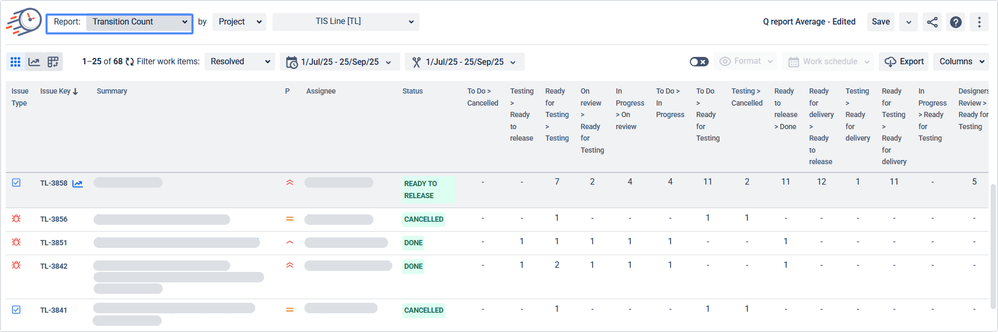

- Where do we rework? Count transitions and reopens to find ping-pong hotspots.

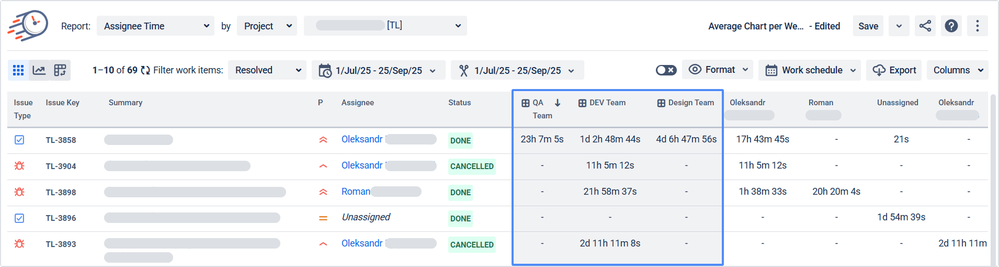

- Who’s overloaded? View Assignee Time to balance effort, not just ticket counts.

- Are we predictable? For Scrum teams, the Sprint Performance Report shows commitment vs. completion, carryover, and scope change, aligned with your estimation method (Story Points, Time, or Issue Count).

- Did change help? Compare before/after periods with the same filters (project, label, JQL) and see if cycle time really dropped.
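The first question on that list, where we wait, comes down to two steps: total the time spent in each status, then roll statuses up into Working and Waiting groups. A minimal sketch, assuming transitions arrive as `(entered_at, status)` pairs and using a hypothetical grouping. It uses wall-clock hours for brevity, where a real report would apply the team's business-hours calendar, and it is not how the app works internally.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical Working/Waiting policy; statuses not listed are ignored.
STATUS_GROUPS = {
    "In Progress": "Working",
    "In QA": "Working",
    "Waiting for Approval": "Waiting",
    "Waiting on Customer": "Waiting",
    "Blocked": "Waiting",
}


def hours_per_status(transitions: list[tuple[datetime, str]], until: datetime) -> dict[str, float]:
    """Hours spent in each status; each status lasts until the next transition."""
    hours: dict[str, float] = defaultdict(float)
    exits = [ts for ts, _ in transitions[1:]] + [until]
    for (entered, status), left in zip(transitions, exits):
        hours[status] += (left - entered).total_seconds() / 3600
    return dict(hours)


def wait_share(status_hours: dict[str, float]) -> tuple[float, float, float]:
    """Return (working_hours, waiting_hours, waiting_fraction)."""
    totals = {"Working": 0.0, "Waiting": 0.0}
    for status, hours in status_hours.items():
        group = STATUS_GROUPS.get(status)
        if group is not None:
            totals[group] += hours
    grand = totals["Working"] + totals["Waiting"]
    return totals["Working"], totals["Waiting"], (totals["Waiting"] / grand if grand else 0.0)


day = [
    (datetime(2024, 3, 4, 9, 0), "In Progress"),
    (datetime(2024, 3, 4, 13, 0), "Waiting for Approval"),
    (datetime(2024, 3, 4, 15, 0), "In QA"),
]
working, waiting, share = wait_share(hours_per_status(day, until=datetime(2024, 3, 4, 17, 0)))
print(f"Working {working:.0f}h, Waiting {waiting:.0f}h, Wait % {share:.0%}")
# Working 6h, Waiting 2h, Wait % 25%
```

Keeping the grouping in one published mapping is what makes Wait % comparable from quarter to quarter.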

No stopwatches. No browser hacks. Just the workflow you already use.

Step 5 — A Minimal, Leader-Friendly View (You’ll Actually Open)

Keep the “executive four” to a single page:

- Avg Cycle Time (stacked: Working vs. Waiting)

- Reopens / Dev↔QA Loop Trend (down is good)

- Commitment vs. Completion (and Carryover)

- Assignee Time (capacity & balance)
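Assignee Time is the tile most often misread, because hours and ticket counts tell different stories. The sketch below assumes assignment stints have already been extracted as `(issue_key, assignee, hours)` rows; `assignee_load`, the people, and the numbers are hypothetical. Hours per item is one simple way to surface the “high time + low count” pattern.

```python
from collections import defaultdict


def assignee_load(rows: list[tuple[str, str, float]]) -> dict[str, dict[str, float]]:
    """Total hours, distinct items, and hours per item for each assignee."""
    hours: dict[str, float] = defaultdict(float)
    items: dict[str, set[str]] = defaultdict(set)
    for issue_key, assignee, stint_hours in rows:
        hours[assignee] += stint_hours
        items[assignee].add(issue_key)  # an issue counts once per person
    return {
        who: {
            "hours": hours[who],
            "items": len(items[who]),
            "hours_per_item": hours[who] / len(items[who]),
        }
        for who in hours
    }


rows = [
    ("OPS-1", "ana", 30.0), ("OPS-2", "ana", 10.0),
    ("OPS-3", "ben", 4.0), ("OPS-4", "ben", 3.0), ("OPS-5", "ben", 5.0),
    ("OPS-6", "ben", 4.0), ("OPS-1", "ben", 2.0),
]
for who, load in assignee_load(rows).items():
    print(f"{who}: {load['hours']:.0f}h over {load['items']} items "
          f"({load['hours_per_item']:.1f} h/item)")
# ana: 40h over 2 items (20.0 h/item)
# ben: 18h over 5 items (3.6 h/item)
```

By ticket count ben looks busier; by hours, ana carries more than twice the load on fewer, heavier items.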

If your dashboard needs a tour guide, it’s not a dashboard. 😉

Practical Plays (because examples beat promises)

- “We’re slow” → “We wait 42% of the time.”

Group statuses into Working/Waiting. Fix the top two wait buckets (approvals and “Waiting on Customer”) before hiring.

- Ping-Pong Patrol.

Use transition counts to spot Dev↔QA loops. Tighten acceptance criteria/test data where loops cluster. Check back in two sprints.

- Capacity without finger-pointing.

Assignee Time vs. item count reveals invisible load. High time + low count = complexity; pair up, don’t pile on.

- Fair calendars for distributed teams.

Apply business-hours calendars per region. “QA took 48h” becomes “6 business hours.” Trust restored, SLAs honest.

- Change that pays for itself.

Trial automation? Label those tickets, run before/after Average Time. Keep what moves the number; kill what doesn’t.
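A before/after check is only as fair as its scope, so the sketch below assumes the issues were already filtered identically on both sides (same project, label, or JQL) and that each carries its resolution date and cycle time in hours. `compare_periods` and the sample numbers are hypothetical. It reports medians next to means because a single outlier can swing an average on small samples.

```python
from datetime import date
from statistics import mean, median


def compare_periods(issues: list[tuple[date, float]], change_date: date) -> dict[str, float]:
    """Compare cycle times resolved before vs. on/after change_date."""
    before = [hours for resolved, hours in issues if resolved < change_date]
    after = [hours for resolved, hours in issues if resolved >= change_date]
    if not before or not after:
        raise ValueError("need resolved issues on both sides of the change")
    result = {
        "n_before": len(before),
        "n_after": len(after),
        "mean_before": mean(before),
        "mean_after": mean(after),
        "median_before": median(before),
        "median_after": median(after),
    }
    result["mean_change"] = (result["mean_after"] - result["mean_before"]) / result["mean_before"]
    return result


# Hypothetical cycle times (hours) for one label, split at the automation start.
sample = [
    (date(2024, 2, 5), 40.0), (date(2024, 2, 12), 32.0),
    (date(2024, 2, 19), 48.0), (date(2024, 2, 26), 30.0),
    (date(2024, 3, 11), 28.0), (date(2024, 3, 18), 30.0),
    (date(2024, 3, 25), 26.0), (date(2024, 4, 1), 34.0),
]
r = compare_periods(sample, change_date=date(2024, 3, 4))
print(f"mean {r['mean_before']:.1f}h -> {r['mean_after']:.1f}h ({r['mean_change']:+.0%}), "
      f"median {r['median_before']:.1f}h -> {r['median_after']:.1f}h")
# mean 37.5h -> 29.5h (-21%), median 36.0h -> 29.0h
```

Eight tickets are for illustration only; with real data, wait until enough items have resolved on both sides before keeping or killing a change.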

Anti-Patterns to Retire This Year

- Counting everything, learning nothing: totals ≠ truth.

- Spreadsheet archaeology: if reports require exports, they won’t happen after Q1.

- Calendar blindness: weekends & holidays inflate blame.

- “Done” without a resolution: if the Done transition doesn’t set Resolution, lead and resolution times break; fix the transition so it does.

- Metric theater: individual callouts first, fixes later—flip it. Coach privately, trend publicly.

The Quiet Payoff

- Teams get fewer surprises and clearer handoffs.

- Leads get honest pacing without micromanagement.

- Executives get predictable delivery and fewer “why was this late?” emails.

- You get a planning cadence that sticks because the numbers are simple, fair, and valuable.

If you want Jira to surface these timings from the history it already has, the Time in Status app slots in without changing how people work. We’re happy to help map your statuses to Start / Pause / Stop, wire up business-hour calendars, and set up the “executive four” so your next retro feels like progress, not penance.

Want a quick start?

- Pick one framework (OKRs/Hoshin/V2MOM).

- Choose four flow metrics (Cycle, Wait %, Reopens/Loops, Carryover).

- Lock Start/Pause/Stop and calendars.

- Build the executive four.

- Review monthly, recalibrate quarterly.

That’s it. Strategy, but make it shippable.

Iryna Komarnitska_SaaSJet_
