Community resources

Deletion operation on .tar file results in "killed" being printed and tar file being incomplete.

I am creating a .tar file based on a docker container and need to update one file (/etc/hosts). Therefore I am running the following commands:

tar --delete -f data.tar etc/hosts

tar --update -f data.tar etc/hosts

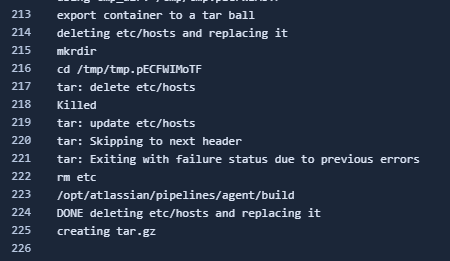

The output I get from the pipeline is the following (and this results in am unfinished tar file):

What could be the issue here?

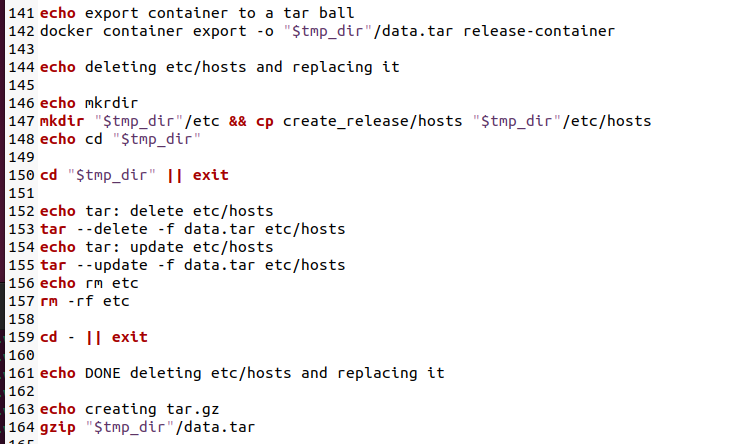

Here you can see a more detailed screenshots of the steps undertaken:

1 answer

Hi Hannes,

In the tar --delete command you pass etc/hosts as the file argument. Is the path of the file correct?

Is your last screenshot from a script that you are executing during a Pipelines build? Can you add the command set -x at the beginning of this script, then run another build and see if that gives additional info about the error?

Kind regards,

Theodora

Hi Theodora,

etc/hosts should exist, since the script works without crashing when I run it locally.

Adding the command:

tar -tvf data.tar etc/hosts

shows that etc/hosts is part of the archive:

-rwxr-xr-x 0/0 0 2023-06-05 15:41 etc/hosts
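
The same check can be scripted so that the build stops early if the member is missing. This is a minimal sketch: `tar_has_member` is a hypothetical helper name, and it assumes the member path is given exactly as it is stored in the archive (for example `etc/hosts`, not `./etc/hosts` or `/etc/hosts`).

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the archive lists the given member.
tar_has_member() {
    # $1 = archive path, $2 = member path exactly as stored in the archive
    tar -tf "$1" "$2" >/dev/null 2>&1
}

# Example usage with the names from this thread:
if tar_has_member data.tar etc/hosts; then
    echo "etc/hosts found in data.tar"
else
    echo "etc/hosts not found (or data.tar is unreadable)" >&2
fi
```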

The screenshot shows the part of the script that concerns the tar file. The script creates the tar file by exporting a docker container, then modifies it, and finally compresses it with gzip.

After adding set -x I can see that the commands I expect are run, but there is no additional output that explains why "killed" is printed.

Thanks and best regards
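
When set -x shows nothing more, the exit status of the failing command can still be informative. By shell convention, a command terminated by signal N reports status 128 + N, so a status of 137 means SIGKILL (signal 9). That is consistent with the kernel stopping the process for using too much memory, although it does not prove it. A minimal sketch, where `describe_status` is a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: explain an exit status in terms of signals.
describe_status() {
    status=$1
    if [ "$status" -gt 128 ]; then
        echo "terminated by signal $((status - 128))"
    else
        echo "exited with status $status"
    fi
}

# Usage sketch: capture the status right after the command of interest.
#   tar --delete -f data.tar etc/hosts
#   describe_status $?
describe_status 137   # 137 = 128 + 9 (SIGKILL)
```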

Hi Hannes,

Thank you for the info. Here are some additional thoughts:

(1) The process may be killed because there is not enough memory. You can add the following commands at the beginning of the script in your bitbucket-pipelines.yml file:

- while true; do date && ps -aux && sleep 5 && echo ""; done &

- while true; do date && echo "Memory usage in megabytes:" && echo $((`cat /sys/fs/cgroup/memory/memory.memsw.usage_in_bytes | awk '{print $1}'`/1048576)) && echo "" && sleep 5; done &

These will print memory usage per process in the Pipelines build log and you can see how much memory is used in total and if it's close to the limit.

You can see info about memory limits here:

If it looks like memory usage is close to the limit, and if your step is a regular step (without the 2x parameter), you can try adding size: 2x to the step to give it more memory. Please keep in mind that 2x steps use twice the number of build minutes of a 1x step.
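
The memory one-liner above reads a cgroup v1 file. As a hedged sketch, the helper below tries that file first and then falls back to two other locations. The fallback paths are assumptions about hosts without swap accounting or with cgroup v2 and may not match your build environment. The function names are hypothetical.

```shell
#!/bin/sh
# Convert a byte count to whole mebibytes (integer division).
bytes_to_mib() {
    echo $(( $1 / 1048576 ))
}

# Print current memory usage in MiB from the first cgroup file that exists.
# The first path is the one quoted in this thread; the other two are
# assumptions (cgroup v1 without swap accounting, then cgroup v2).
print_memory_usage() {
    for f in /sys/fs/cgroup/memory/memory.memsw.usage_in_bytes \
             /sys/fs/cgroup/memory/memory.usage_in_bytes \
             /sys/fs/cgroup/memory.current; do
        if [ -r "$f" ]; then
            echo "Memory usage in MiB: $(bytes_to_mib "$(cat "$f")")"
            return 0
        fi
    done
    echo "no readable cgroup memory file found" >&2
    return 1
}

print_memory_usage || true
```

Running `print_memory_usage` in a background loop with `sleep 5`, as in the commands above, gives a periodic reading in the build log.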

(2) You can try adding the -v argument in the tar --delete command in case that prints any more useful info.

(3) You can try defining the data.tar file as an artifact, then download it and try to execute the tar --delete command locally. This is to figure out if by any chance there is something wrong with the tar file generated during the build.

Please keep in mind that only files that are in the BITBUCKET_CLONE_DIR at the end of a step can be configured as artifacts, so you would need to copy the file there if you generate it in a directory outside the BITBUCKET_CLONE_DIR.

For this scenario, it would also be useful to have the version of the file prior to the tar --delete command, so you could add a command in your script before tar --delete that copies the file to a different location inside the BITBUCKET_CLONE_DIR or gives it a different name.
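
The copy step described in (3) could look like the sketch below. `snapshot_archive` and the file name `data-before-delete.tar` are hypothetical. The copied file still has to be declared as an artifact in bitbucket-pipelines.yml.

```shell
#!/bin/sh
# Hypothetical helper: keep a copy of an archive in a destination directory
# under a new name, so it can be declared as an artifact later.
snapshot_archive() {
    # $1 = source archive, $2 = destination directory, $3 = new file name
    mkdir -p "$2" && cp -- "$1" "$2/$3"
}

# Usage sketch inside the build script, before tar --delete runs:
#   snapshot_archive data.tar "$BITBUCKET_CLONE_DIR" data-before-delete.tar
```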

Kind regards,

Theodora

Hi Theodora,

Thank you for your reply. Using the commands provided in (1), I was able to figure out that tar --delete uses more memory than the runners have. Unfortunately, the 2x runners also run out of memory, which is why I did not notice earlier that this was the issue.

For now I do not have a real solution to this issue.

Hello @Hannes Kuchelmeister,

If you are using Linux Docker self-hosted runners, they can be configured with size 1x, 2x, 4x, or 8x, which allocates 4 GB, 8 GB, 16 GB, or 32 GB of memory to the runner, respectively. It's important to note that the runner's host machine must have that much memory available.

Another option you might want to try is using Linux Shell runners. These runners are executed directly in a terminal session of Linux (with no docker involved) and can use the total resources available for the host. You can check the instructions in Setup Linux shell runner for details on how to install and use a Linux shell runner.

Thank you, @Hannes Kuchelmeister!

Patrik S

I managed to reduce memory usage by piping docker container export directly into tar --delete like this:

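# export the container and delete the hosts file from the stream
# (-f - makes tar read the archive from stdin and write it to stdout)

docker container export release-container | tar --delete -f - etc/hosts > "$tmp_dir/data.tar"

# add the new hosts file to the same archive:

tar --append -v -f "$tmp_dir/data.tar" etc/hosts

gzip "$tmp_dir/data.tar"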
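
The streaming approach can be tried without Docker. The sketch below assumes GNU tar, and the directory layout and file contents are made up for illustration. A plain `tar -cf -` stands in for `docker container export`.

```shell
#!/bin/sh
set -e
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT

# Stand-in for the container filesystem (hypothetical contents).
mkdir -p "$work/src/etc" "$work/new/etc"
echo "old hosts"  > "$work/src/etc/hosts"
echo "other file" > "$work/src/etc/motd"
echo "new hosts"  > "$work/new/etc/hosts"

# Stand-in for `docker container export`: write a tar stream to stdout,
# drop etc/hosts from the stream, and save the result.
tar -cf - -C "$work/src" etc/hosts etc/motd \
    | tar --delete -f - etc/hosts > "$work/data.tar"

# Append the replacement file, then list the archive to confirm.
tar --append -f "$work/data.tar" -C "$work/new" etc/hosts
tar -tf "$work/data.tar"
```

With `-f -`, `tar --delete` reads the archive from standard input and writes the result to standard output, so the full archive is never edited in place on disk before the member is removed.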

- © 2024 Atlassian
