Community resources

Bitbucket Installation Issue on Kubernetes - EKS

With EFS

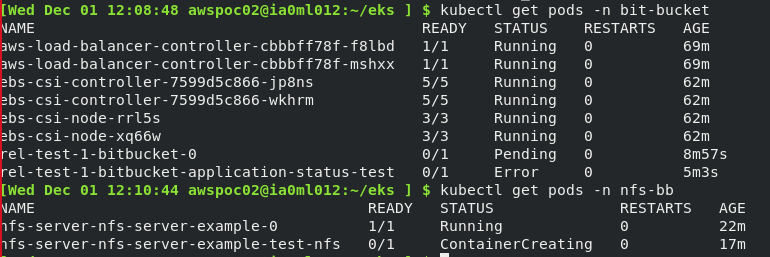

I am trying to deploy Bitbucket Data Center, with clustering disabled, on EKS using the Helm charts. The EKS cluster is up and running in AWS and has an ingress controller, CoreDNS and the other workloads required for this deployment. However, when I install the Bitbucket Helm chart, the test-shared-home-permissions test fails. I am using EFS as the shared home. Here is the link to this test. I have followed the documentation provided here.

With NFS

After reading various posts about EFS, I decided to try NFS instead. However, when I install the Bitbucket Helm chart, the test-application-status test fails. I am using NFS as the shared home. I can access the NFS share from another machine, and it seems to work properly there. Here is the link to this test. As part of the application status test, it tries to get the status of the application, cannot get it, and fails. I have followed the documentation provided here. The Bitbucket pod always stays in Pending status.

@Himanshu Sinha it's really difficult to say what's wrong without logs. Did you enable the NFS permission fixer? I would expect this init container to fail first if there are permission issues in the volume.

By the way, EFS isn't officially supported as a storage backend for Bitbucket due to performance issues.

As for NFS, I guess the app simply failed to start because it wasn't able to write to the shared home. Providing logs and the output of kubectl get pods -n $namespace will help.
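To collect what is being asked for here, a minimal Python sketch (summarize_pods is a hypothetical helper, not part of the Helm chart) that takes the parsed output of kubectl get pods -n $namespace -o json and reports each pod's phase plus any init container, such as the NFS permission fixer, that exited with a non-zero code. It reads only standard Pod status fields (status.phase, status.initContainerStatuses).

```python
from typing import List


def summarize_pods(pod_list: dict) -> List[str]:
    """One line per pod: its phase and any init containers that failed.

    `pod_list` is the parsed JSON from `kubectl get pods -o json`.
    """
    lines = []
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        failed = []
        for ics in status.get("initContainerStatuses", []):
            # A crash-looping init container shows "waiting" in `state`
            # and keeps the failed run's exit code in `lastState`.
            for key in ("state", "lastState"):
                terminated = ics.get(key, {}).get("terminated")
                if terminated and terminated.get("exitCode", 0) != 0:
                    failed.append(f"{ics['name']} (exit {terminated['exitCode']})")
                    break
        line = f"{pod['metadata']['name']}: {status.get('phase', 'Unknown')}"
        if failed:
            line += "; failed init containers: " + ", ".join(failed)
        lines.append(line)
    return lines
```

In a small script you could call it as summarize_pods(json.load(sys.stdin)) and pipe the kubectl output in; for a failing init container, kubectl logs with -c and the container name then shows why it failed.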

The NFS permission fixer is enabled. Yes, the app failed to start. It seems to be related to the NFS shared home.

The fact that the application status test is not passing and the pod is in a Pending state suggests that something other than shared storage may also be preventing the Bitbucket pod from starting. There are a few things you should confirm first:

- Ensure that all of the required prerequisites are in place.

- Ensure that the values.yaml has been configured correctly based on these prerequisites.

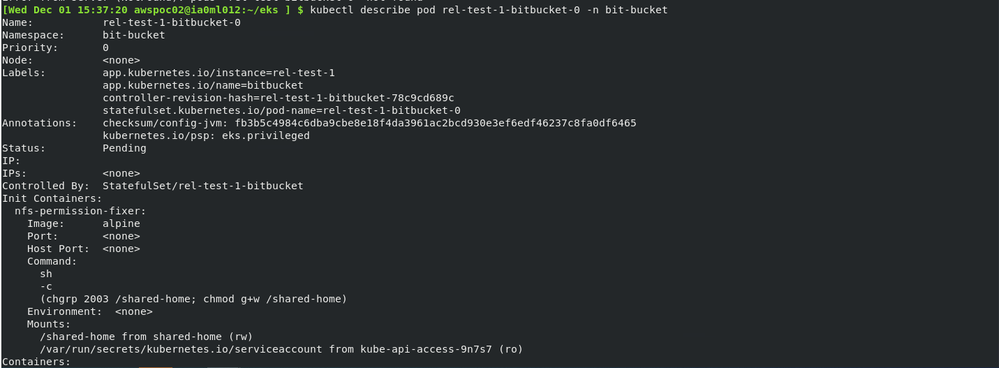

Once you have done this and redeployed, if the pod is still in a Pending state, you can use the following command to get more detail on why it is in this state:

kubectl describe pod <pod_name>

This should hopefully reveal what the issue is.

Dylan.
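The scheduler records why it left a pod Pending in the pod's PodScheduled condition, and kubectl describe pod usually repeats it as a FailedScheduling event. A small Python sketch (scheduling_problem is a hypothetical helper) that pulls that reason out of the parsed output of kubectl get pod <pod_name> -o json:

```python
from typing import Optional


def scheduling_problem(pod: dict) -> Optional[str]:
    """Return "<reason>: <message>" if the pod could not be scheduled, else None.

    `pod` is the parsed JSON from `kubectl get pod <pod_name> -o json`.
    """
    for cond in pod.get("status", {}).get("conditions", []):
        # PodScheduled=False usually means no node passed the scheduler's
        # checks (for example, not enough CPU or an unbound PVC).
        if cond.get("type") == "PodScheduled" and cond.get("status") == "False":
            return f"{cond.get('reason', 'Unknown')}: {cond.get('message', '')}"
    return None
```

A None result means the pod was placed on a node, so the problem lies later (image pull, init containers, or the application itself).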

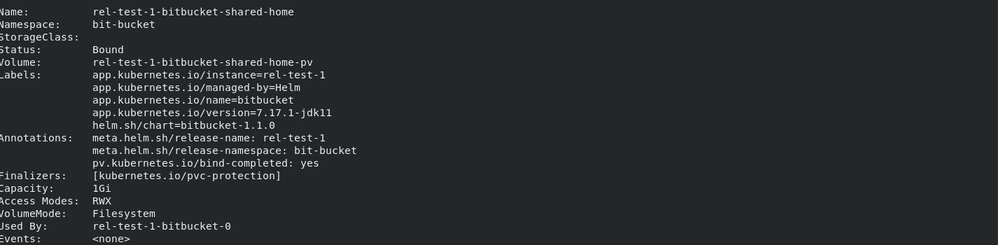

Ah, I missed that the pod is in a Pending state. Odds are the PVC was never bound, so the pod is waiting on an unbound PVC. Let's take a look at the events in kubectl describe. I'd also look at kubectl describe pvc.
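A quick way to spot an unbound claim is to list every PVC whose phase is not Bound. A minimal Python sketch (unbound_claims is a hypothetical helper) working on the parsed output of kubectl get pvc -n <namespace> -o json:

```python
from typing import List


def unbound_claims(pvc_list: dict) -> List[str]:
    """Names of PVCs whose status.phase is anything other than "Bound".

    `pvc_list` is the parsed JSON from `kubectl get pvc -o json`.
    """
    return [
        pvc["metadata"]["name"]
        for pvc in pvc_list.get("items", [])
        if pvc.get("status", {}).get("phase") != "Bound"
    ]
```

One caveat: if the StorageClass uses volumeBindingMode: WaitForFirstConsumer, a claim legitimately stays Pending until a pod that uses it is scheduled, so an unbound PVC can be a symptom of an unschedulable pod rather than its cause.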

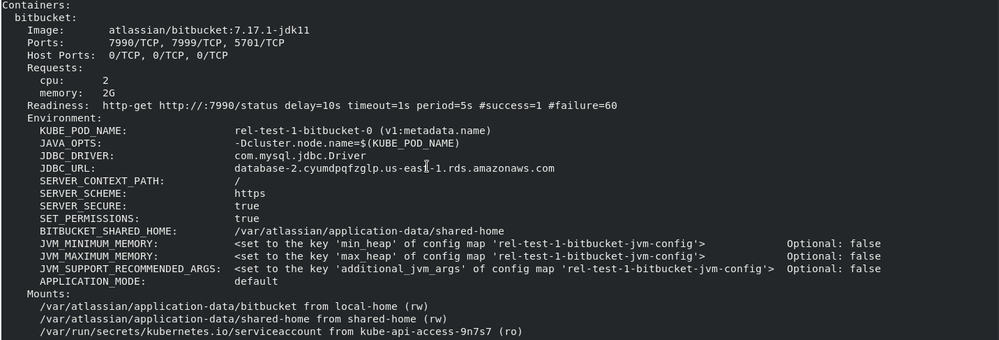

Here is the output from kubectl describe.

From the kubectl get pods output attached above, I believe all the prerequisites are in place.

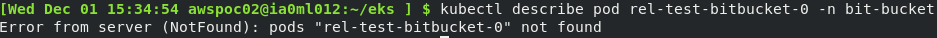

@Himanshu Sinha can you please provide the output of kubectl describe pod rel-test-bitbucket-0 -n bit-bucket? The events in there will shed some light.

@Himanshu Sinha sorry, wrong pod name: it's rel-test-1-bitbucket-0, the one that is in a Pending state.

Hey @Himanshu Sinha

From the describe output you supplied for the PVC, I cannot see anything obvious that would prevent the pod from starting.

I think the next step is to verify the NFS share and its associated PVC. To do this, you can run a simple pod (taking Bitbucket out of the equation) that mounts the PVC you created for the NFS share as a volume, something like this:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-home-browser
spec:
  containers:
    - name: browser
      image: debian:stable-slim
      volumeMounts:
        - mountPath: /shared-home
          name: shared-home
      # Keep the container running so you can exec into it.
      command: [ "bash", "-c", "--" ]
      args: [ "while true; do sleep 30; done;" ]
  volumes:
    - name: shared-home
      persistentVolumeClaim:
        # The PVC created for the NFS shared home.
        claimName: bit-bucket
```

If this is successful and you can exec into the pod and navigate to the mount path, /shared-home, then this will:

- Confirm that the NFS share is set up correctly

- Indicate that the problem may be a misconfiguration of the Bitbucket pod

It might also be worth running kubectl describe on the pod that runs the shared home test, "nfs-server-nfs-server-example-test-nfs".
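Being able to navigate into /shared-home proves the volume is mounted, but Bitbucket also needs to write to it. A minimal Python write probe is sketched here under two assumptions: the image ships Python 3 (debian:stable-slim does not, so you might swap in an image such as python:3-slim for this test pod), and the container runs as the same UID as Bitbucket (set via securityContext.runAsUser), since a probe that succeeds as root may prove little. probe_writable is a hypothetical helper name.

```python
import os
import tempfile


def probe_writable(path: str) -> bool:
    """Create, write, read back and delete a uniquely named file under `path`.

    Returns True only if every step succeeds, which is a stronger check
    than listing the directory.
    """
    payload = b"shared-home write probe\n"
    try:
        fd, probe = tempfile.mkstemp(prefix=".probe-", dir=path)
    except OSError:
        return False  # cannot even create a file here
    ok = False
    try:
        with os.fdopen(fd, "wb") as fh:
            fh.write(payload)
            fh.flush()             # empty Python's buffer first...
            os.fsync(fh.fileno())  # ...then ask the OS to commit to storage
        with open(probe, "rb") as fh:
            ok = fh.read() == payload
    except OSError:
        ok = False
    finally:
        try:
            os.remove(probe)
        except OSError:
            ok = False  # a file we cannot delete is also a permissions problem
    return ok


print("writable" if probe_writable("/shared-home") else "NOT writable")
```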

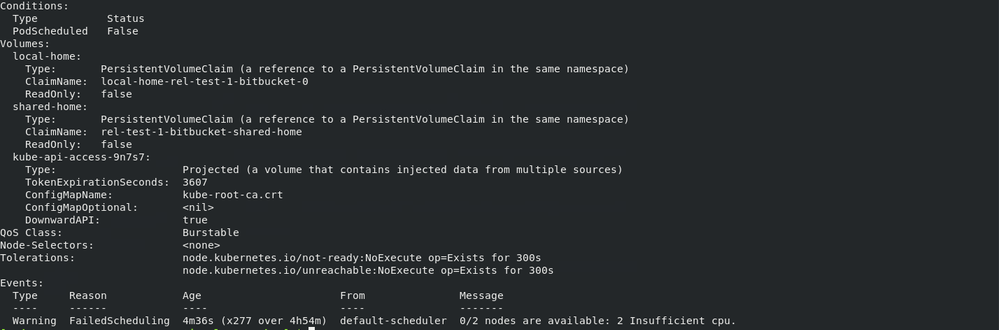

@Dylan Rathbone Before trying the next step, here is the output suggested by @Yevhen.

I was using t3.medium instances. I then tried m5.large and still hit the same issue: Insufficient CPU.

I ran kubectl describe node and there doesn't seem to be a CPU issue.

So an m5.large has only 2 vCPUs, and the default CPU request in values.yaml is 2:

```yaml
container:
  requests:
    cpu: "2"
```

My understanding is that Kubernetes reserves some compute for itself (the kubelet and system daemons), so you cannot allocate the full 2 CPUs to your pod. Try dropping the request down to 1.75 or 1 and see what happens.
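The reservation can be estimated. A minimal Python sketch, assuming the nodes run the EKS-optimized AMI with its default kubelet settings, whose bootstrap logic reserves CPU for kube-reserved in tiers (6% of the first core, 1% of the second, 0.5% of cores three and four, 0.25% of each core above four) and, as assumed here, no system-reserved CPU. Both function names are hypothetical.

```python
def eks_kube_reserved_cpu_m(vcpus: int) -> int:
    """Millicores reserved for kube-reserved by the tiered formula above."""
    total = vcpus * 1000
    # (tier start, tier end, reservation in hundredths of a percent)
    tiers = [(0, 1000, 600), (1000, 2000, 100), (2000, 4000, 50), (4000, total, 25)]
    reserved = 0
    for start, end, hundredths_pct in tiers:
        span = max(0, min(total, end) - start)  # millicores falling in this tier
        reserved += span * hundredths_pct // 10000
    return reserved


def allocatable_cpu_m(vcpus: int) -> int:
    """Allocatable CPU = capacity - kube-reserved (no system-reserved assumed)."""
    return vcpus * 1000 - eks_kube_reserved_cpu_m(vcpus)


for vcpus in (2, 4):
    print(f"{vcpus} vCPU -> {allocatable_cpu_m(vcpus)}m allocatable")
# prints:
# 2 vCPU -> 1930m allocatable
# 4 vCPU -> 3920m allocatable
```

Under these assumptions a 2-vCPU node such as t3.medium or m5.large offers about 1930m, so a cpu: "2" request can never be scheduled there, and DaemonSets such as aws-node and kube-proxy typically request part of the remainder.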

Here is the pod resource request:

```yaml
jvm:
  maxHeap: "1g"
  minHeap: "512m"
container:
  requests:
    cpu: "1"  # Tried "1.75" as well; it didn't help
    memory: "2G"
```

@Himanshu Sinha experiment with the CPU request. So far, it's purely a Kubernetes issue: the cluster does not have a worker node with enough CPU. And yes, a node with 2 vCPUs does not have all of them allocatable. Try a small request, for example 500m.

I did experiment, but it didn't help. I will try it without EKS to see if that helps.

Thanks @Yevhen and @Dylan Rathbone for prompt response.

@Himanshu Sinha I can only recommend looking at your available worker node capacity and trying the smallest CPU request possible, say 10m. Perhaps you have other workloads that reserve CPU resources?
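This also explains why kubectl describe node can look fine while the pod reports Insufficient CPU: the scheduler compares the pod's CPU request against the node's allocatable CPU minus the requests of pods already placed there, not against actual usage. A simplified Python sketch of that check (hypothetical helpers; it ignores pod overhead and sidecar-style init containers) into which you can plug the "Allocatable" and "Allocated resources" figures from kubectl describe node:

```python
from decimal import Decimal
from typing import Iterable


def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "2", "1.75" or "500m" to millicores.

    Simplified: handles whole or decimal cores and the "m" suffix only,
    and assumes no precision finer than 1m.
    """
    q = quantity.strip()
    if q.endswith("m"):
        return int(q[:-1])
    return int(Decimal(q) * 1000)


def effective_request_m(containers: Iterable[str], init_containers: Iterable[str] = ()) -> int:
    """Pod CPU request as the scheduler counts it: the larger of the sum of
    the app containers' requests and the largest single init-container request."""
    app = sum(cpu_to_millicores(c) for c in containers)
    init = max((cpu_to_millicores(c) for c in init_containers), default=0)
    return max(app, init)


def fits(node_allocatable: str, already_requested: Iterable[str], pod_request_m: int) -> bool:
    """True if the pod fits into allocatable minus the *requests* of pods
    already on the node. Actual CPU usage plays no part in this check."""
    free = cpu_to_millicores(node_allocatable) - sum(
        cpu_to_millicores(r) for r in already_requested
    )
    return pod_request_m <= free
```

For example, with an illustrative 1930m allocatable and 25m plus 100m already requested by DaemonSets, a 1-CPU pod fits, but it stops fitting once other workloads on the node request another full CPU.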

© 2024 Atlassian