Quarterly Business Review (QBR) with Jira Align

Quarterly Business Review (some basics first)

What: An integrated demo of the features delivered over the course of the quarter/PI

When: At the end of the PI; it is different from the regular system or sprint demo/review

How: It’s presented with working software, not PowerPoint

Who: Business leaders, portfolio managers, Epic owners (and, of course, the ART)

Key Suggestion from experience

Keep time for feedback after the demo. Feedback from stakeholders could include:

- Did the demo meet their expectations?

- Did they see something that they didn’t expect?

- Did they get more than they expected? :-)

How does it all begin?

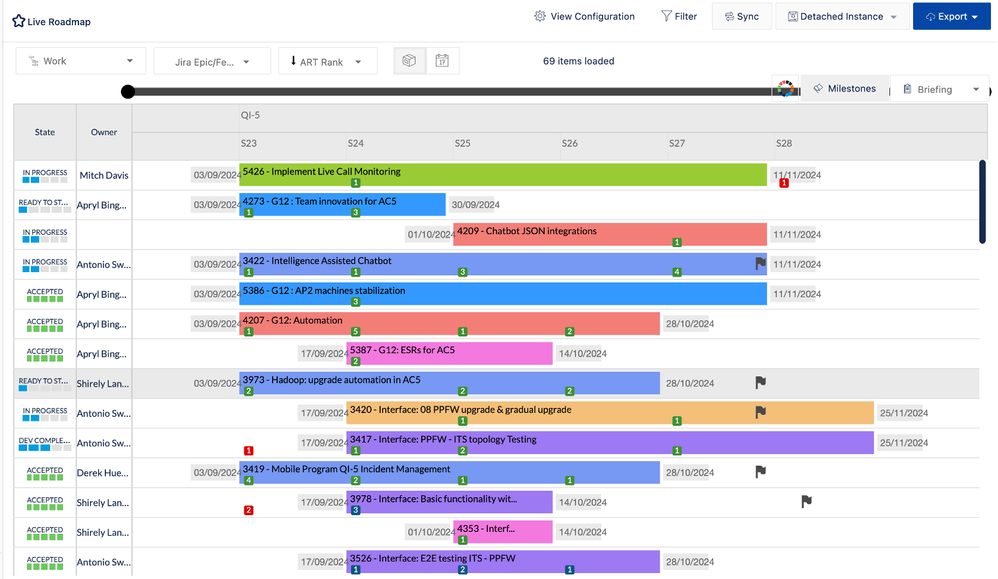

We start by presenting the plan we began with, then talk through the accomplishments made along the journey, which might have started a couple of quarters ago and is reaching its goal in the current quarter, which is about to end.

Using the Roadmap, we show how many features we were able to accomplish and which roadblocks left us stranded.

As the next step, we start the integrated demo! Here is the challenge: we have a mixed bag of stakeholders, some functional, some technical, and some corporate folks. How do we present a demo that everyone can understand? Not a purely technical demo, and not a PowerPoint, but a solution presented from the perspective of the end user, a real consumer, who could even be an internal user within the organization.

How to enrich the experience: Simulate the feature and demonstrate the solution just as if customers were actually using it.

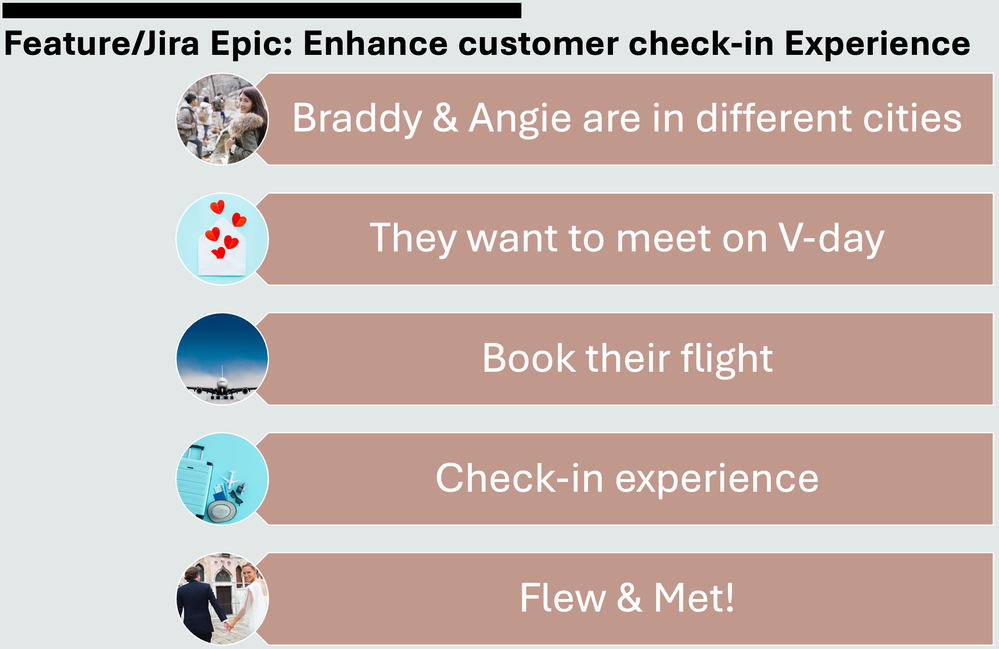

Let's say we are trying to demonstrate a feature that enhances the customer check-in experience.

Braddy and Angie are soulmates who work in different cities. Braddy wants to surprise Angie and make her feel special, awww! So he books a flight, which takes just 3 simple steps.

Next, he boards the taxi, which was also arranged via the flight booking page.

On the way to the airport, the chauffeur asks, "Which flight, Sir?"

"Oh! I've heard some great things about them from another passenger. Good luck, Sir, have a nice flight!" says the chauffeur as Braddy gets out of the taxi.

At the airport, he simply goes to the check-in kiosk and from there straight to the boarding area. "That's a pleasant change!" exclaims Braddy.

Eventually, he is able to surprise Angie, all thanks to an easy and convenient customer journey.

Moving on to quantitative and qualitative measurement

The PI system demo focuses on the product perspective, while quantitative and qualitative measurement focuses on the process perspective.

After the PI, stakeholders want to know:

- “Where are we with PI objectives?”

- “Did we achieve our commitments?”

- “How are we with automation?”

The data alone isn’t of great value. The value lies in understanding what the metrics are saying and then using them to provide relevant context.

Example: "We are 70% predictable!" vs. "We are 70% predictable because we are working on too many topics at the same time."

- Portfolio leaders might avoid accountability and blame the teams for not delivering Features rather than identifying the real problem.

- If you want to find solutions that work, you have to find the real story: why you only reached 70% predictability.

- For RTEs, this is the moment to take a stand for the ART, but for that, you need factual data and context!

Note: Quantitative measurement isn’t meant to deliver negative health updates. If there is transparency and you are executing your plans well, then it's not a health update, it's just factual data.

How can Jira Align enable this?

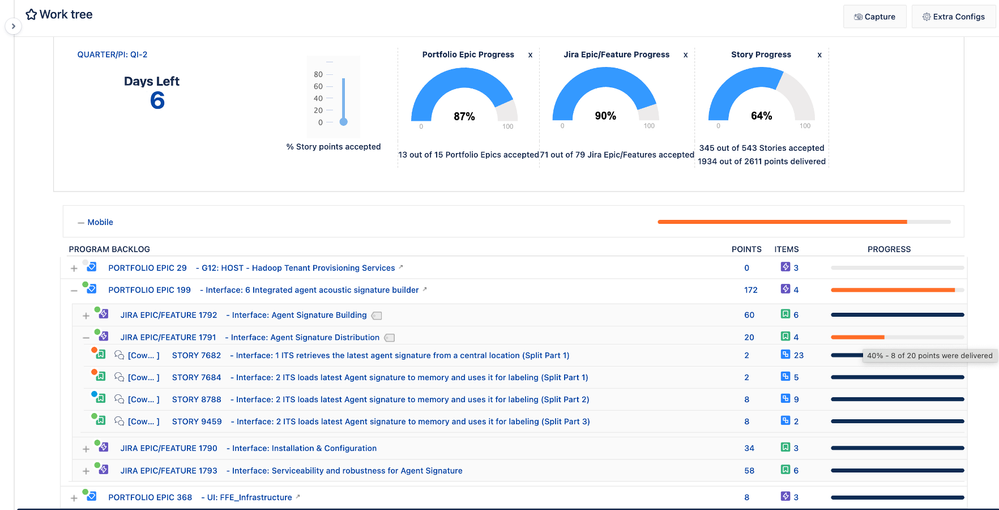

Within a single view, you can see the number of days left in the current PI. In this example, we are in the Innovation & Planning Sprint.

You can also view:

- how many Portfolio Epics and features were accepted

- how many stories were accepted

- how many story points were delivered across the ART

If need be, you can dive deeper into the progress of unaccomplished Epics, narrowing it down to feature- and story-level progress in a nested view.

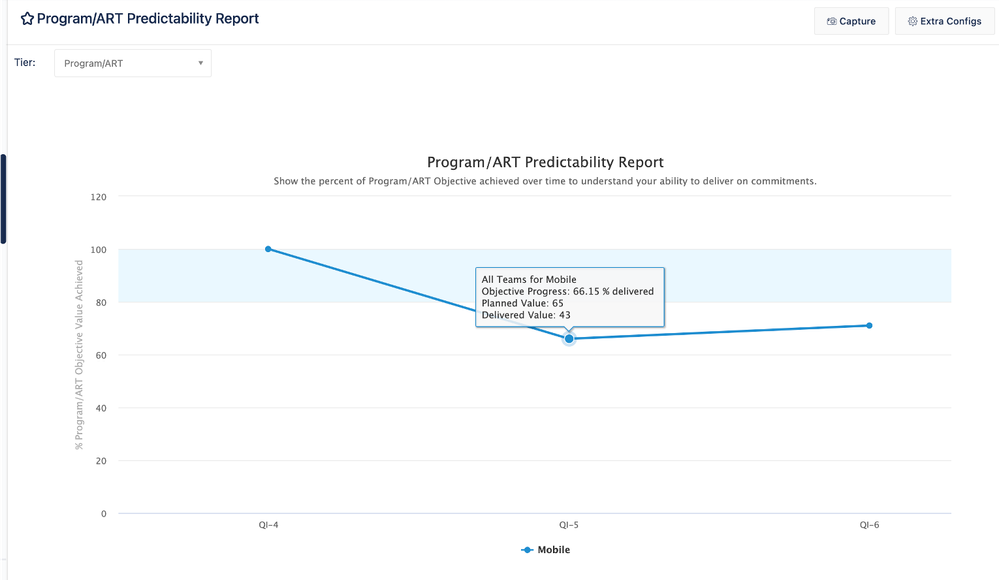

Program Predictability Report (in Jira Align)

The PI objectives created during PI planning carry a planned business value assigned by the stakeholders. Towards the end of the PI, the accepted business value is compared with the planned value to produce the ART predictability score. The score shows objective progress in terms of how much functionality has been delivered and can be compared at the PI level.
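To make the arithmetic concrete, here is a minimal sketch of how such a score could be worked out by hand. It assumes the score is simply total accepted business value divided by total planned business value; the exact calculation Jira Align performs is not described here, and the `Objective` class, its field names, and the sample objectives are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Objective:
    """One PI objective; field names are hypothetical, not Jira Align's schema."""
    name: str
    planned_value: int   # business value assigned by stakeholders at PI planning
    accepted_value: int  # business value awarded at the end of the PI


def predictability(objectives: list[Objective]) -> float:
    """Return accepted business value as a percentage of planned business value."""
    planned = sum(o.planned_value for o in objectives)
    if planned == 0:
        raise ValueError("no planned business value to compare against")
    accepted = sum(o.accepted_value for o in objectives)
    return 100.0 * accepted / planned


# Made-up objectives for illustration only.
objectives = [
    Objective("Faster check-in", planned_value=10, accepted_value=8),
    Objective("Taxi booking", planned_value=6, accepted_value=3),
    Objective("Kiosk rollout", planned_value=4, accepted_value=3),
]
score = predictability(objectives)
print(f"{score:.0f}% predictable")
```

With these sample numbers, 14 of 20 planned points were accepted, which gives the 70% figure used in the example earlier. The number still needs its context: which objectives fell short, and why.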

There could be reasons why the report does not reflect the actual picture.

The following factors might contribute to data inconsistency and should be checked before the PI is closed:

- Objectives Not Completed

- Risks That Are Open

- Impediments That Are Open

- Stories Not Accepted

- Features Not Accepted

- Open Action Items

- Epics Not Accepted

- Dependencies Not Delivered

- Orphan Features

- Orphan Stories
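A few of these checks can be sketched against an exported list of work items. This is only an illustration of the idea behind such a cleanup: the dictionary keys (`type`, `parent`, `state`) and the sample items are assumptions made for this example, not Jira Align's data model, and only the "not accepted" and "orphan" checks are covered.

```python
from collections import Counter

# Hypothetical export of work items; the keys are illustrative only.
items = [
    {"type": "feature", "id": "F1", "parent": "E1", "state": "Accepted"},
    {"type": "feature", "id": "F2", "parent": None, "state": "In Progress"},
    {"type": "story", "id": "S1", "parent": "F1", "state": "Accepted"},
    {"type": "story", "id": "S2", "parent": None, "state": "Accepted"},
    {"type": "story", "id": "S3", "parent": "F2", "state": "In Progress"},
]


def cleanup_findings(items):
    """Count items that are not accepted or that have no parent, per item type."""
    findings = Counter()
    for item in items:
        if item["state"] != "Accepted":
            findings[f"{item['type']} not accepted"] += 1
        if item["parent"] is None:
            findings[f"orphan {item['type']}"] += 1
    return findings


findings = cleanup_findings(items)
for label, count in sorted(findings.items()):
    print(f"{label}: {count}")
```

Any non-zero count is a prompt to tidy up the data before reading the predictability score.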

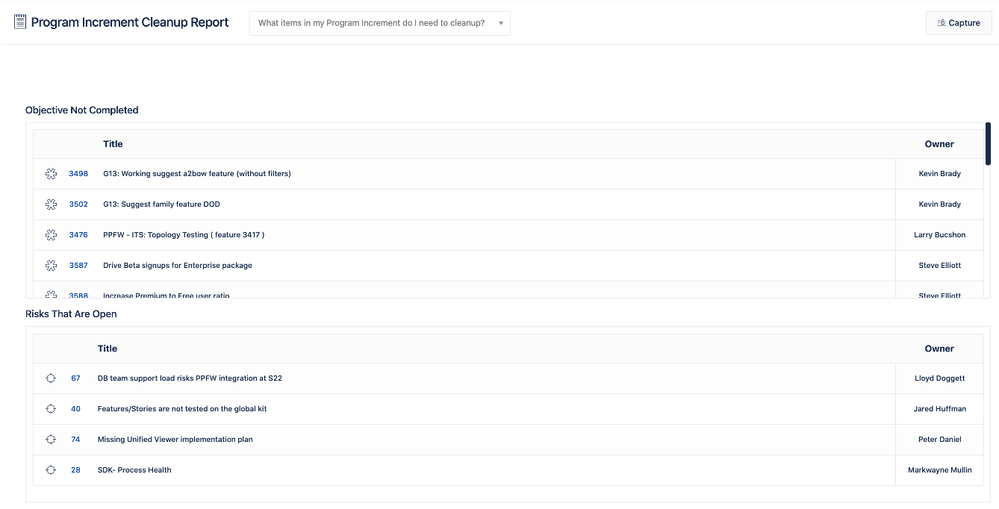

The Program Increment cleanup report (in Jira Align) can highlight the areas that need attention, which would lead to a realistic Program Predictability score.

What other early signs could have been missed?

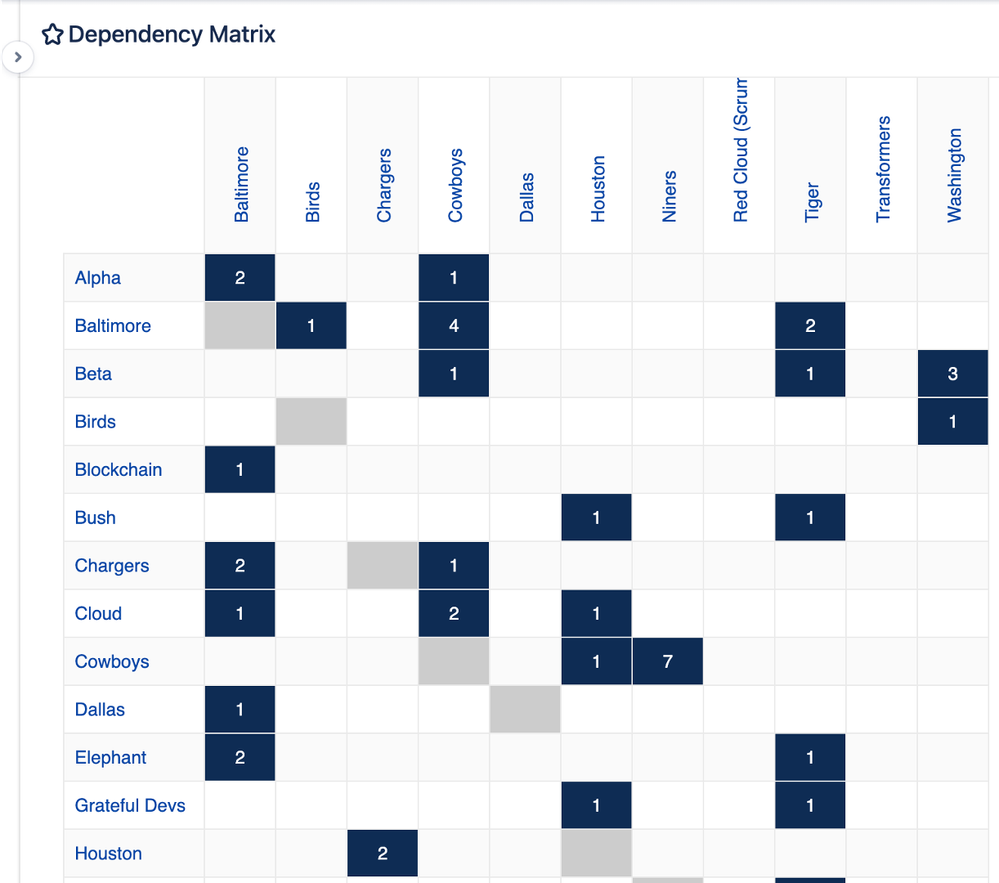

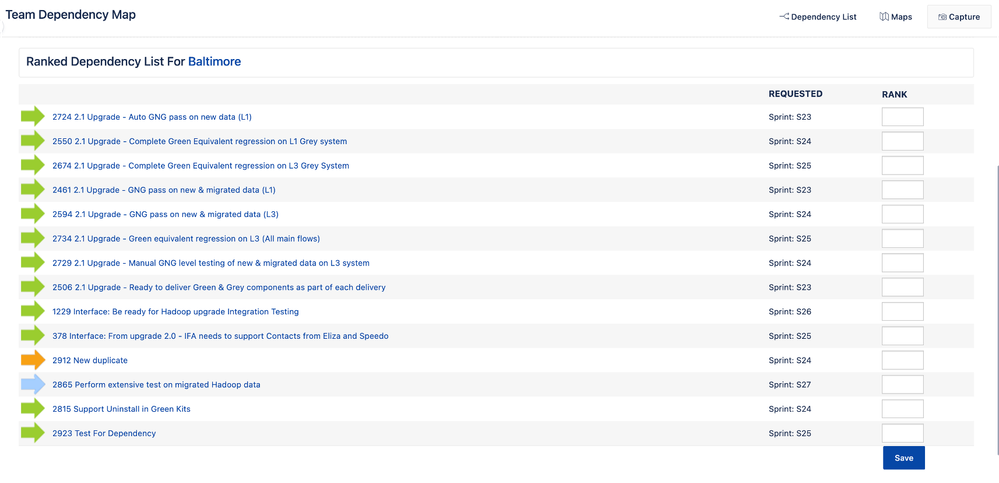

Have a look at the dependency matrix. What's your first observation?

Yes, you have spotted it well: Team "Cowboys" and Team "Niners" have 7 dependencies with each other. What's going on here? This would need to be investigated. Maybe it's a skill shortage in one of the teams, which makes the other team dependent, and a little resource restructuring might resolve the problem. However, there could be other reasons as well. The point is that the RTE needs to stay on top of these observations to make teams as independent as possible, and the Dependency Matrix can play a role in that.
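The same observation can be reproduced from a plain list of dependencies. The sketch below counts dependencies per pair of teams, ignoring direction, and flags pairs at or above a threshold. The data, the function names, and the threshold of 5 are assumptions chosen for illustration; only the Cowboys/Niners count of 7 comes from the matrix discussed above.

```python
from collections import Counter

# Hypothetical (requesting team, depends-on team) pairs; not real Jira Align data.
dependencies = [
    ("Cowboys", "Niners"), ("Niners", "Cowboys"), ("Cowboys", "Niners"),
    ("Niners", "Cowboys"), ("Cowboys", "Niners"), ("Niners", "Cowboys"),
    ("Cowboys", "Niners"), ("Newcastle", "Elephant"), ("Beta", "Cowboys"),
]


def pair_counts(dependencies):
    """Count dependencies per unordered team pair, ignoring direction."""
    return Counter(frozenset(pair) for pair in dependencies)


def hot_pairs(dependencies, threshold):
    """Return team pairs whose dependency count reaches the threshold."""
    return {
        tuple(sorted(pair)): count
        for pair, count in pair_counts(dependencies).items()
        if count >= threshold
    }


print(hot_pairs(dependencies, threshold=5))
```

A flagged pair is a starting point for a conversation with both teams, not a verdict.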

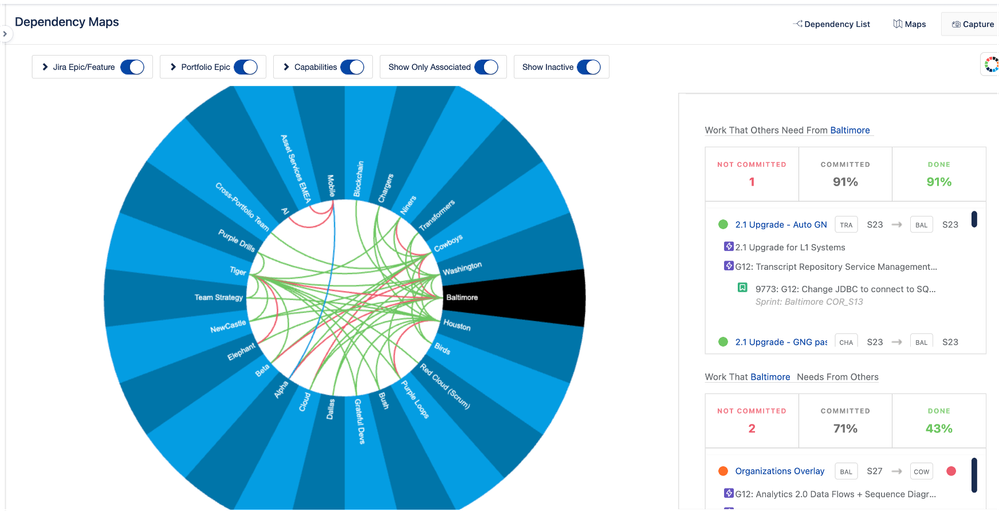

Isn’t a spider web a Spider-Man thing?

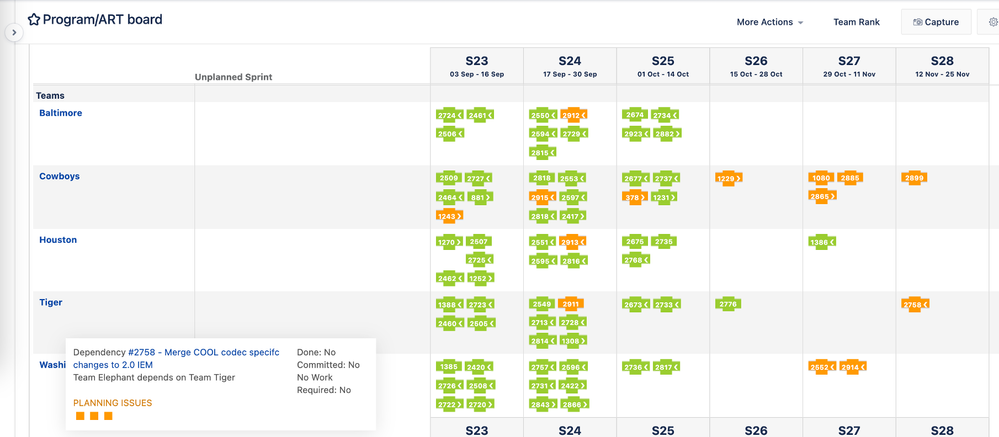

Another view of dependency mapping exposes another potential problem area. The observation here is that teams like Newcastle, Elephant, and Beta have a fair set of dependencies, but teams like Cowboys, Washington, Baltimore, and Houston have so many that it makes me wonder whether they work on their PI commitments or only on dependencies throughout the PI.

One might want to filter the dependency map by Features or Portfolio Epics, amongst others, to dive deeper. This is yet another important area to investigate, as it might be disrupting the ART predictability score in terms of deliverables and eventually impacting the business value.

Another area this exposes is teams with a large number of dependencies during the PI. During PI planning, teams generally align and map each dependency to the sprint in which they intend to resolve it, but as PI planning concludes, this might result in multiple dependencies landing in a particular sprint or on a particular team. How does the team figure out which dependency to resolve first? Basically, what's the priority?

Yet another strong element is the team dependency map, which provides a platform for the decision maker to rank the dependencies attributed to a specific sprint.

This isn't a solution to the problem, because it doesn't fix the root cause. How and when to identify the problem is important, so that a rational viewpoint can be taken on it.

Something has always been around but perhaps not in focus: yes, the program board. Think back to the in-person PI planning event and remember the big brown sheets put together with sprints on one axis and teams on the other, with sticky notes falling off after a while and strings of wool making a mess. Yeah, nostalgia. Fast forward to the present era of geographically distributed teams, and the need for a digital program board became evident. The Program Board in Jira Align presents the same view, and the data can be filtered based on what you wish to see: dependencies, milestones, feature target sprints, PI objectives, or all of them at a glance.

So the problem we were trying to resolve is identifying when a team is overloaded with dependencies in a particular sprint. That can be done during the PI planning event itself, where it can be mitigated, rather than learning about the problem later and trying to resolve it then.
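As a rough sketch of that check, the snippet below counts planned dependencies per team and sprint and lists the slots that exceed a limit. The sample data and the limit of 2 are assumptions for illustration; a sensible limit depends on the team's capacity and would be agreed during PI planning.

```python
from collections import Counter

# Hypothetical planned dependencies: (team that must deliver, target sprint).
planned = [
    ("Cowboys", 2), ("Cowboys", 2), ("Cowboys", 2), ("Cowboys", 3),
    ("Houston", 2), ("Newcastle", 1), ("Houston", 4),
]


def overloaded(planned, limit):
    """Return (team, sprint) slots holding more dependencies than the limit."""
    load = Counter(planned)
    return sorted(slot for slot, count in load.items() if count > limit)


print(overloaded(planned, limit=2))
```

Here only Cowboys in sprint 2 is flagged, so that is where re-planning or ranking of dependencies would start.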

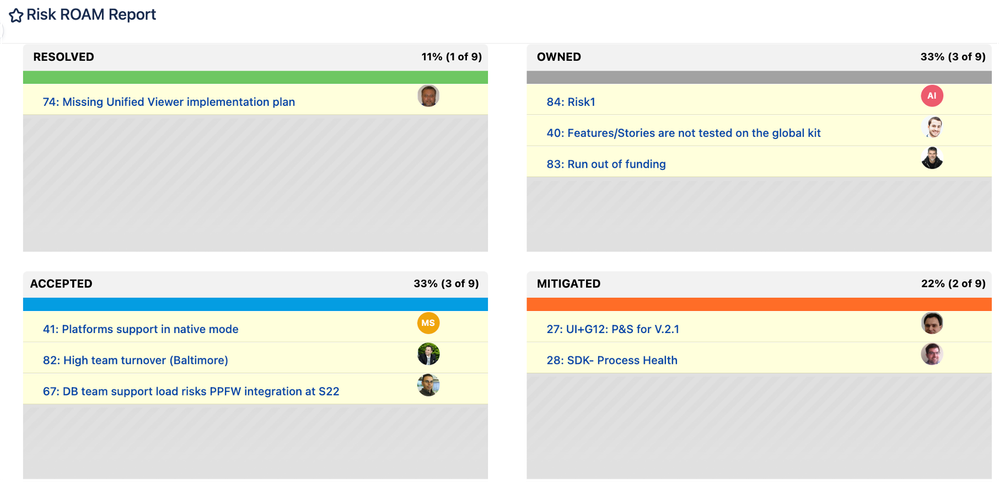

Please note that this is problem mitigation. Resolution means finding the root cause of why there are so many dependencies. There might be rational reasons for them, but then it is problem acceptance, still not a resolution. With this thought process of Resolution, Ownership, Acceptance & Mitigation comes risk management.

Risk management is as crucial as dependency management, and it can be visualized in the following manner in Jira Align.

Retrospective and problem-solving workshop

After witnessing a couple of problem-solving workshops at quarterly business reviews, my observation is that it's not really a problem-solving workshop; rather, it's a PI-SE: Problem Identification and Solution Exploration. Let's see why.

The problems proposed during this workshop should be:

- experienced cross-team, and they are usually systemic

The root causes of these problems often lie in:

- culture, process, or environment

- practically, these take time to solve properly

The majority of the time in the workshop is spent identifying the root causes.

Even with little time left, groups tend to:

- brainstorm multiple possible solutions and vote to get a prioritized list

- find that the first step in the solution is to explore the problem more

The problem-solving workshop:

- raises problems to the surface and gets the conversation started

The “solving” often takes some more time, coordination, and prioritization.

A couple of other views that could resolve other problems emerging from this workshop are:

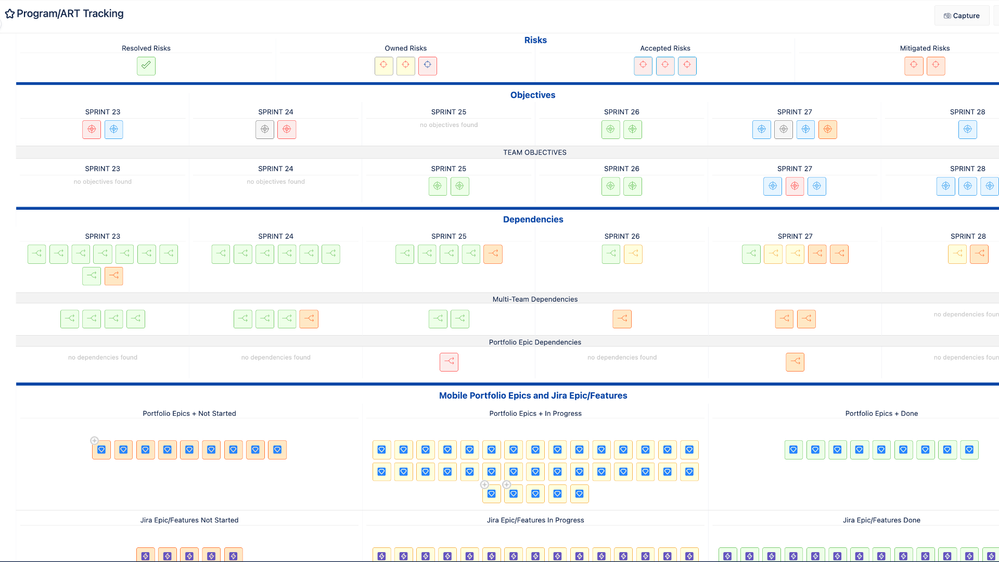

1. Program tracking: The program tracking report provides a one-page view of the status of all project management objects, including risks, dependencies, and objectives.

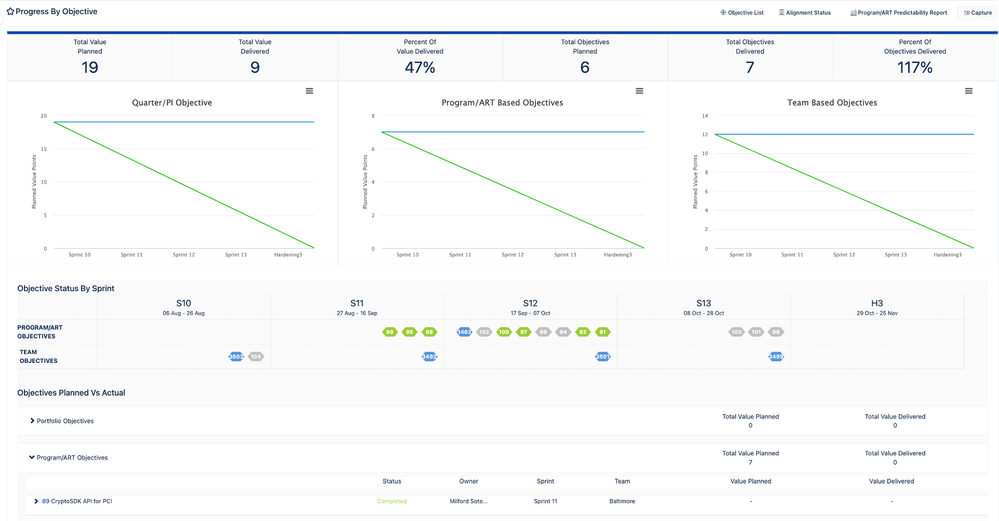

2. The progress by objective report captures the status of the key objectives at all levels, including the blocked and in-progress items. Objectives are specific business and technical goals for the upcoming program increment (PI).

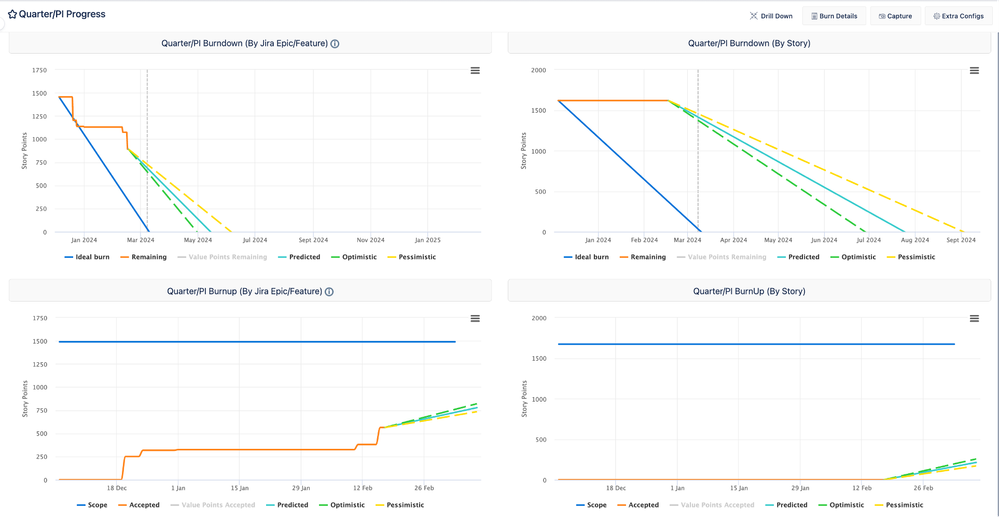

3. A burndown chart represents work remaining versus time; the outstanding work is on the vertical axis and time is on the horizontal axis. These charts are especially useful for Portfolio Managers and Release Train Engineers for predicting when all work will be completed; it provides a high-level understanding of whether the work for a PI is on track.

4. The program increment scope change report captures features that have been added and/or removed during a PI. It provides a snapshot of features that were in scope at the beginning of a PI and allows you to track all changes and view the current features in scope. This report also shows how estimates may have changed on stories and features during the PI. The chart is especially useful for Portfolio Managers and Release Train Engineers to understand scope changes during a PI.
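The idea behind the scope change report in item 4 can be sketched as a comparison of two snapshots. The function below is a hypothetical illustration, not the report's actual logic: it assumes features are identified by an ID and compares the set in scope at the start of the PI with the set in scope now. The feature IDs are made up.

```python
def scope_change(baseline, current):
    """Compare feature IDs in scope at PI start with those in scope now."""
    baseline, current = set(baseline), set(current)
    return {
        "added": sorted(current - baseline),
        "removed": sorted(baseline - current),
        "unchanged": sorted(baseline & current),
    }


# Hypothetical feature IDs for illustration.
at_pi_start = ["F-101", "F-102", "F-103"]
in_scope_now = ["F-101", "F-103", "F-104", "F-105"]

change = scope_change(at_pi_start, in_scope_now)
print(change)
```

In this example two features were added and one was removed, which is the kind of movement worth explaining alongside the predictability score.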

Karan Madaan

Senior Enterprise Solution Strategist
