Community resources

Can custom pipes read repository variables?

Hi,

I have written a custom pipe that accepts variables. If I explicitly pass the variables to the pipe in my bitbucket-pipelines.yml file, it works as expected. However, from some of the documentation I have read online (specifically this one - see Default Variables section), it suggests that it should be able to read the variables from the context.

There is a good chance that I have misunderstood what this means. I am assuming that any environment variable that I have defined at the account, repository or deployment level would get passed into the pipe when I define a variable in the pipe.yml and default it to the environment variable - as shown on that linked article. However, if I do not explicitly pass the variable into the pipe then it doesn't work.

Is there a step I have missed, or something I have not enabled, to get this to work? Or have I misunderstood the feature, and it is not possible? Any help would be greatly appreciated.

Here is what I have put together.

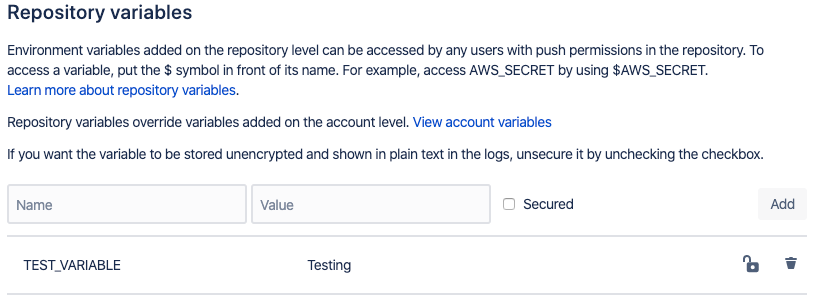

I have created the repository variable.

Custom Pipe:

Dockerfile

    FROM alpine:3.11

    RUN apk --update --no-cache add \
        bash

    RUN wget -P / https://bitbucket.org/bitbucketpipelines/bitbucket-pipes-toolkit-bash/raw/0.4.0/common.sh

    COPY ./pipe.sh ./pipe.yml /

    ENTRYPOINT ["/pipe.sh"]

pipe.sh

    #!/usr/bin/env bash
    #
    # Echoes the test variable
    #
    # Required globals:
    #   TEST_VARIABLE
    #

    source "$(dirname "$0")/common.sh"

    # mandatory parameters
    TEST_VARIABLE=${TEST_VARIABLE:?'TEST_VARIABLE variable is missing.'}

    echo "${TEST_VARIABLE}"

    success 'Test variable has been passed through'

pipe.yml

    name: CustomPipe
    variables:
      - name: TEST_VARIABLE
        default: '${TEST_VARIABLE}'

Pipeline:

bitbucket-pipelines.yml - This version works

    image:
      name: atlassian/default-image:2

    pipelines:
      branches:
        master:
          - step:
              name: Run pipe
              script:
                - pipe: <url for pipe>
                  variables:
                    TEST_VARIABLE: ${TEST_VARIABLE}

This succeeds, echoing the value of the variable and displaying the success message:

    Testing
    ✔ Test variable has been passed through

bitbucket-pipelines.yml - My assumption is that this version should work too, but it fails

    image:
      name: atlassian/default-image:2

    pipelines:
      branches:
        master:
          - step:
              name: Run pipe
              script:
                - pipe: <url for pipe>

I get this failure message as per the pipe.sh:

/pipe.sh: line 12: TEST_VARIABLE: TEST_VARIABLE variable is missing.
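The message comes from the `${TEST_VARIABLE:?...}` expansion in pipe.sh: when the variable is unset or empty, the shell prints the text after `:?` and exits with a non-zero status. A minimal sketch of that behaviour, run outside Pipelines (the `check_required` helper is hypothetical and exists only for this illustration):

```shell
#!/usr/bin/env bash

# Hypothetical helper: succeeds only when TEST_VARIABLE is set and non-empty.
# The expansion runs in a subshell so that a failure ends the subshell,
# not the calling script; the error text is discarded here.
check_required() {
  ( : "${TEST_VARIABLE:?TEST_VARIABLE variable is missing.}" ) 2>/dev/null
}

# Case 1: nothing passed the variable into the container.
unset TEST_VARIABLE
if check_required; then echo "present"; else echo "missing"; fi

# Case 2: the variable was passed explicitly.
TEST_VARIABLE='Testing'
if check_required; then echo "present"; else echo "missing"; fi
```

So the error only says that nothing placed TEST_VARIABLE in the container's environment; it does not say why.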

3 answers

1 accepted

I have managed to figure out where I was going wrong. It is not clear in the documentation that the pipe.yml will only get used if you use the Bitbucket repo to invoke the pipe instead of going directly to the registry.

In my example above I showed `<url for pipe>`. In my case I was pulling the pipe directly from AWS ECR. Once I changed this to point to my Bitbucket repo instead, it started using the defaults defined in pipe.yml. The two different ways of calling your pipe are described in step 11 of the how to write a pipe for Bitbucket Pipelines article. Once I started calling the pipe as per point 2, it started working for me. It would be useful if the documentation explained the implications of using one way versus the other.

Hi @Ming Han Chung,

So there are two ways that you can invoke your pipe from within the bitbucket-pipelines.yml file. The first way is to specify the docker url which pulls it directly from the docker registry (or your own such as AWS ECR) and spins up the container. The second way is to specify the name of your bitbucket repository for the pipe. The pipe.yml file will only be consumed when you use the second option - point it to your pipe repository.
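As a rough way to tell the two forms apart, the sketch below classifies a `pipe:` value by its prefix. It is only an illustration of the rule described in this thread; `describe_pipe_reference` is a hypothetical helper, and the two sample references are the ones used in the examples in this thread.

```shell
#!/usr/bin/env bash

# Hypothetical helper: report how a `pipe:` value from bitbucket-pipelines.yml
# would be fetched, based on the two forms discussed in this thread.
describe_pipe_reference() {
  case "$1" in
    docker://*) echo 'docker image: pipe.yml is not read' ;;
    *)          echo 'bitbucket repository: pipe.yml is read' ;;
  esac
}

describe_pipe_reference 'docker://acme/my-custom-pipe-docker:1.0.0'
describe_pipe_reference 'acme/my-custom-pipe-repo:1.0.0'
```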

I will use an example to try to demonstrate this. Let's assume we have a pipe called my-custom-pipe that is defined in the acme/my-custom-pipe-repo Bitbucket repository (acme being the company name/workspace, my-custom-pipe-repo the repository name). We build our pipe as per the documentation, ensuring that the pipe.yml file is in the root of the Bitbucket repository. For simplicity's sake we will assume the pipe image is built and deployed to Docker Hub under the account acme and repository my-custom-pipe-docker. We write the pipe.yml like so:

    name: my-custom-pipe
    image: acme/my-custom-pipe-docker:1.0.0
    repository: acme/my-custom-pipe-repo
    variables:
      - name: DEBUG
        default: 'False'
      - name: AWS_ACCESS_KEY_ID
        default: "${AWS_ACCESS_KEY_ID}"

The two main things to talk about here are the image and the variables. The image is the Docker Hub reference for the container (including the Docker tag). The build pipeline will read this and fetch the container from that location. We have defined two variables, both with defaults. The DEBUG variable defaults to 'False', but the AWS_ACCESS_KEY_ID variable is set to read an environment variable. If this variable has been set against your account, the repository or somewhere else in your bitbucket-pipelines.yml file, it will get pulled through and used. If it does not exist, it will be empty (null).
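The precedence between an explicitly passed value and a pipe.yml default can be modelled in a few lines of shell. This is only a sketch of the observable behaviour, not Bitbucket's implementation: `resolve_pipe_variable` is a hypothetical helper, `example-key-id` is a made-up value, and treating an empty explicit value the same as an omitted one is an assumption.

```shell
#!/usr/bin/env bash

# Hypothetical model of how one pipe variable ends up with a value.
#   $1: value given under `variables:` in bitbucket-pipelines.yml (empty if omitted)
#   $2: the already-expanded default from pipe.yml (empty if the referenced
#       variable does not exist)
resolve_pipe_variable() {
  printf '%s\n' "${1:-$2}"
}

# DEBUG is passed explicitly, so the pipe.yml default 'False' is ignored.
resolve_pipe_variable 'true' 'False'

# AWS_ACCESS_KEY_ID is omitted, so the default "${AWS_ACCESS_KEY_ID}" applies.
AWS_ACCESS_KEY_ID='example-key-id'   # stands in for an account or repository variable
resolve_pipe_variable '' "${AWS_ACCESS_KEY_ID:-}"
```

The first call prints `true` and the second prints `example-key-id`; with neither an explicit value nor an existing variable, the result is an empty string.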

Now it's time to consume our pipe. We have a separate application that we are working on that we want to use our new pipe during the build. We define our bitbucket-pipelines.yml file like so:

    # ...
    pipelines:
      branches:
        master:
          - step:
              name: A step using our new pipe
              script:
                - pipe: acme/my-custom-pipe-repo:1.0.0
                  variables:
                    DEBUG: 'true'

In this case we have told the pipeline to obtain the pipe via the Bitbucket repository instead of going directly to Docker. This was the bit I missed when I first tried building my own pipe. This way the pipe.yml in the root of the pipe's Bitbucket repository is read, and the image URL defined in it is used to fetch the container. The pipeline also reads the variables you have defined, resolves any defaults you have specified and passes them through to the pipe instance. In our example above, we have set the value of the DEBUG variable but left AWS_ACCESS_KEY_ID to be defaulted, because we have defined it as an account variable in Bitbucket. Note that we specify a version at the end of the repository reference (1.0.0). This can be either a tag or a branch in your repository.

This should all be working now as you would expect.

Let's look at the other way and understand why it doesn't work. Let's assume we set the bitbucket-pipelines.yml like this:

    pipelines:
      branches:
        master:
          - step:
              name: A step using our new pipe
              script:
                - pipe: docker://acme/my-custom-pipe-docker:1.0.0
                  variables:
                    DEBUG: 'true'

In this case, the build pipeline pulls the container directly from Docker and does not go to our repository, so our defaulted values will not be set. The reason (I believe) that this does not work is that the pipeline never reads the pipe.yml file, even if you have added it to your Docker image. The defaults need to be defined at the point of spinning up the container. My guess is that they are set as environment variables on the Docker container when it is started. Therefore, it would be too late to try to read the pipe.yml file from within the container. The only way it can work is for the pipeline to know how to default your variables before it starts the container, and the only way it can do that is by looking in your Bitbucket repository and reading the file.
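To picture that guess: if the pipeline starts the pipe with something like `docker run`, every variable has to be on the command line before the container exists. The sketch below only prints such a command and does not run Docker. `build_docker_run` is a hypothetical helper, and the exact command Bitbucket uses is not documented in this thread.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print a `docker run` command for an image ($1)
# and a list of NAME=value pairs (all remaining arguments).
# Values containing spaces would need extra quoting; this sketch ignores that.
build_docker_run() {
  image="$1"
  shift
  cmd='docker run --rm'
  for pair in "$@"; do
    # -e sets an environment variable inside the container at start-up.
    cmd="${cmd} -e ${pair}"
  done
  printf '%s %s\n' "${cmd}" "${image}"
}

# Only DEBUG was passed in bitbucket-pipelines.yml. With docker:// nothing
# adds AWS_ACCESS_KEY_ID, because pipe.yml was never read.
build_docker_run 'acme/my-custom-pipe-docker:1.0.0' 'DEBUG=true'
```

Under this model, a pipe.yml copied into the image can only be seen after the environment has already been fixed, which matches the behaviour observed with the `docker://` form.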

I hope that all makes sense.

Thank you so much. This really clears it up as I was told something different by Atlassian and it didn't work.

My last question: when you say you can call by tag or by branch, would calling by branch look like this?

acme/my-custom-pipe-repo:master

The reason is that I don't want to go to every single repo that uses the pipe and update the tag number every time I change the pipe and bump the version (which is automatic for the most part, as part of the Docker upload).

Hi @Ming Han Chung. No worries. I'm glad it helped you.

Yes you can set it to point to master, as you have shown in your example, and it will work.

Hi,

today I wrote a Custom Pipe and encountered the same question.

A Custom Pipe subclasses bitbucket_pipes_toolkit.Pipe, which itself has an env property.

This is a Dict-like object containing pipe parameters. This is usually the environment variables that you get from os.environ.

class bitbucket_pipes_toolkit.Pipe(pipe_metadata=None, pipe_metadata_file=None, schema=None, env=None, logger=<RootLogger root (INFO)>, check_for_newer_version=False)

Base class for all pipes. Provides utilities to work with configuration, validation etc.

(…)

env
Dict-like object containing pipe parameters. This is usually the environment variables that you get from os.environ.
Type: dict

https://bitbucket-pipes-toolkit.readthedocs.io

Hope this helps.

Yves

Hi,

I am also facing the same issue as mentioned in below:

I also tried to pass the variables through the repository variables but the values are not getting passed to the docker container. Not sure what is the right way to achieve this...
